Deep learning for analysing synchrotron data streams

Citation

Wang, B.; Guan, Z.; Yao, S.; Qin, H.; Nguyen, M.H.; Yager, K.G.; Yu, D. "Deep learning for analysing synchrotron data streams"

New York Scientific Data Summit 2016, 16 7747813.

doi: 10.1109/NYSDS.2016.7747813

Summary

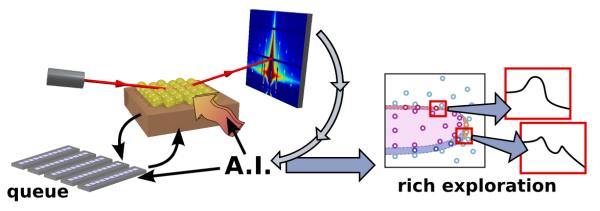

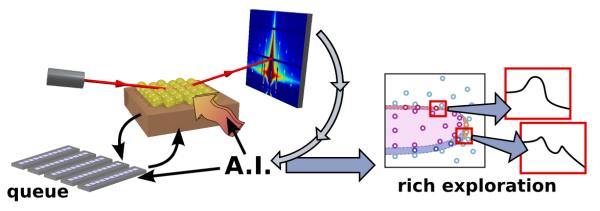

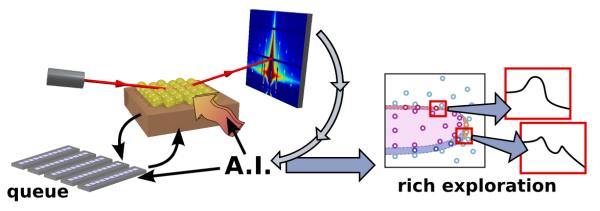

We demonstrate preliminary results on the use of deep learning methods to automatically tag x-ray scattering images.

Abstract

The National Synchrotron Light Source II (NSLS-II) at Brookhaven National Laboratory (BNL) now provides some of the world's brightest x-ray beams. A suite of imaging and diffraction methods, exploiting megapixel detectors with kilohertz frame rates at NSLS-II beamlines, generates a variety of image streams at unprecedented velocities and volumes. A complete understanding of a complex material system often requires a cluster of x-ray characterization tools that can reveal its elemental, structural, chemical, and physical properties at different length scales and time scales. The proliferation and continuing refinement of x-ray probes allow the same sample to be studied from different perspectives and at different granularities, times, and locations. These powerful tools generate a correspondingly daunting big-data challenge, with multiple image streams that outpace any manual effort and traditional data-analysis practice. In this paper, we applied deep learning methods, in particular deep convolutional neural networks (CNNs), to automatically recognize features in image streams from NSLS-II, and integrated these methods into Google's TensorFlow framework to cluster and label both real and synthetic 2-D scattering image patterns. These methods could empower scientists by providing timely insights, allowing them to steer experiments efficiently during their precious x-ray beamtime allocations. Experiments show that CNN-based image labeling attains a 10% improvement over traditional K-means clustering and support vector machines (SVMs).
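As a rough illustration of what a single convolutional stage of a CNN computes, the pure-Python sketch below applies a convolution, a ReLU nonlinearity, and 2×2 max pooling to a toy 2-D image. It is not the authors' TensorFlow model, whose architecture is not described here. The function names, the 6×6 image containing a vertical bright streak, and the hand-picked line-detecting kernel are all hypothetical; in a trained CNN the kernel weights are learned from labeled images rather than chosen by hand.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D cross-correlation (what deep-learning frameworks call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(oh):
        row = []
        for j in range(ow):
            # Weighted sum of the kernel-sized window whose top-left corner is (i, j).
            s = 0.0
            for a in range(kh):
                for b in range(kw):
                    s += image[i + a][j + b] * kernel[a][b]
            row.append(s)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    """Element-wise ReLU: negative responses are clipped to zero."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2x2(x: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row or column is dropped."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


def conv_block(image: Matrix, kernel: Matrix) -> Matrix:
    """One hypothetical CNN stage: convolution -> ReLU -> max pooling."""
    return max_pool2x2(relu(conv2d_valid(image, kernel)))


if __name__ == "__main__":
    # Toy 6x6 "detector image" with a bright vertical streak in column 2.
    image = [[1.0 if c == 2 else 0.0 for c in range(6)] for _ in range(6)]
    # Hand-picked 3x3 kernel that responds to thin vertical lines.
    kernel = [[-1.0, 2.0, -1.0] for _ in range(3)]
    print(conv_block(image, kernel))
```

Running the script prints a 2×2 feature map whose large values mark where the streak sits; stacking several such stages and feeding the final feature maps to a classifier is the general pattern a CNN-based labeler follows.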