OpenAI

- Dec 5: o1 is out of preview. The updated o1 is faster (it uses fewer tokens) and performs better. OpenAI has also introduced a “Pro” version of o1, which thinks for even longer.

- Here’s an example from a biomedical professor about o1-pro coming up with a legitimately useful and novel research idea.

- Dec 5: There is now a ChatGPT Pro tier, $200/month for unlimited access to all the best models (including o1 Pro).

- Dec 6: Reinforcement Fine-Tuning Research Program. Selected orgs will be able to apply reinforcement learning (RL) to OpenAI models for specific tasks. This is reportedly much more sample-efficient and effective than traditional fine-tuning. It will be reserved for challenging engineering/research tasks.

- Dec 9: Sora officially released (examples).

- Dec 10: Canvas has been improved and made available to all users.

- Dec 11: ChatGPT integration into Apple products.

- Dec 12: ChatGPT can pretend to be Santa.

Google

- Google releases Gemini 2.0.

- New “Deep Research” feature can search the web and pull together a coherent research report.

- Imagen 3 (image) and Veo (video) models are now available on Google’s Vertex AI cloud platform.

- Multimodal Live API in Google AI Studio. You can share your webcam or screen so that it can provide more directed help. (Example of using it as a research assistant.)

Research Insights

- Google DeepMind: Mastering Board Games by External and Internal Planning with Language Models. Search-based planning is used to help LLMs play games. They investigate both externalized search (MCTS) and internalized search (CoT). The systems can achieve high levels of play. Of course, the point is not to beat a specialized neural net trained on that game, but to show how search can unlock reasoning modalities in LLMs.

- Training Large Language Models to Reason in a Continuous Latent Space. Introduces Chain of Continuous Thought (COCONUT), wherein you directly feed the last hidden state as the input embedding for the next token. So instead of converting to human-readable tokens, the state loops internally, providing a continuous thought.

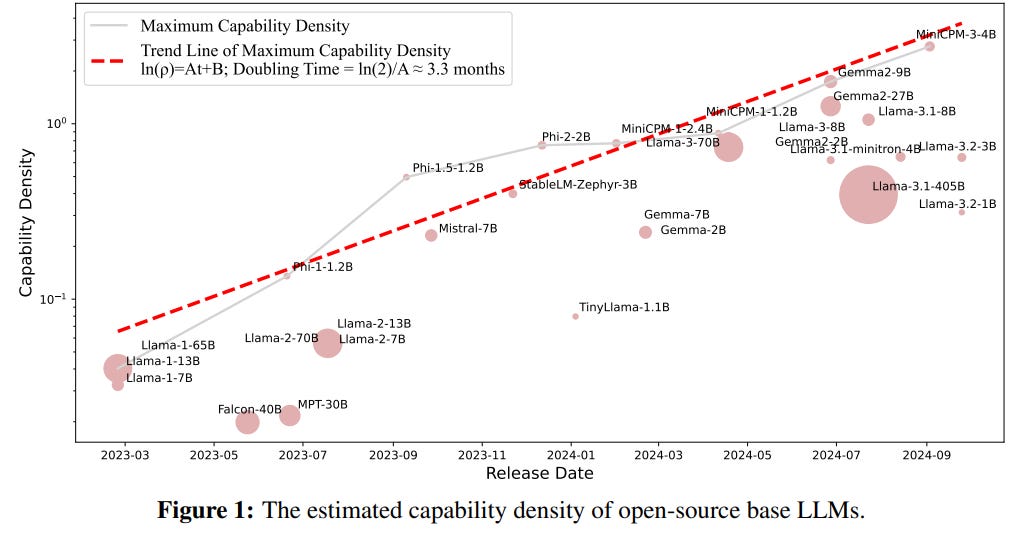

- New preprint considers how “capability density” is increasing over time: Densing Law of LLMs. They find that, for a given task, every 3 months the model size needed to accomplish it is halved. This shows that hardware scaling is not the only thing leading to consistent improvements.
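
To make the halving claim above concrete, here is a minimal sketch that takes the 3-month figure at face value and assumes a clean exponential, N(t) = N0 * 2^(-t/T) with T = 3 months. The function name `required_params` and the 70B starting point are illustrative assumptions, not values from the preprint.

```python
def required_params(initial_params: float,
                    months_elapsed: float,
                    halving_months: float = 3.0) -> float:
    """Model size needed to match a fixed task performance after some months.

    Assumes (hypothetically) that the required size halves exactly every
    `halving_months` months, i.e. N(t) = N0 * 2 ** (-t / T).
    """
    return initial_params * 0.5 ** (months_elapsed / halving_months)


if __name__ == "__main__":
    start = 70e9  # illustrative starting size: 70B parameters
    for months in (0, 3, 6, 12):
        size_b = required_params(start, months) / 1e9
        print(f"after {months:2d} months: {size_b:.3f}B parameters")
```

Under this idealized assumption, a year of progress corresponds to four halvings, so the required model size shrinks by a factor of 16. Real progress is unlikely to be this smooth, so treat the output as a rough extrapolation.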

LLM

- Meta released Llama 3.3 70B, which achieves similar performance to Llama 3.1 405B. Meta also announced plans for a 2 GW datacenter in Louisiana, for future open-source Llama releases.

- Ruliad introduces Deepthought 8B (demo), which claims good reasoning for the model size.

- Stephen Wolfram released a post about a new Notebook Assistant that integrates into Wolfram Notebooks. Wolfram describes this as a natural-language interface to a “computational language”.

- GitIngest is a tool to “turn codebases into prompt-friendly text”. It takes a GitHub repository and turns it into a text document for easy inclusion in an LLM's context.

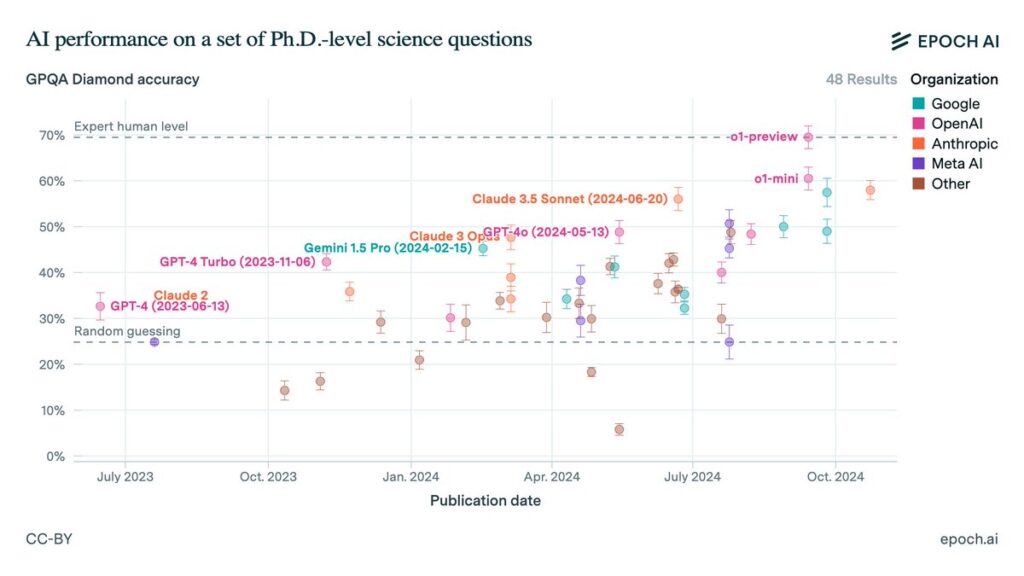

- While we haven’t seen a “new class of model” (bigger/better than GPT-4) in quite a while, it’s worth remembering the substantial improvements we’ve seen from perfecting the existing systems (from Epoch AI benchmarks). On Ph.D.-level Q&A, over the last year we’ve gone from no-better-than-random to roughly human-expert:

AI Agents

- Article: Emergence’s AI orchestrator launches to do what big tech offerings can’t: play well with others. Of course there are many other scaffolding (LangChain, Pydantic, Flow, etc.) and orchestration (ell, swarm, AG2, etc.) frameworks (not to mention commercial attempts thereof: Amazon, Crew AI, MultiOn, etc.). But it’s good to see more development in this space.

Audio

- ElevenLabs added GenFM to their web product: you can now generate AI podcasts, and listeners can tune in on the ElevenReader app.

Image Synthesis

- Spawning AI is developing an image model based only on public domain data. It will be made available on Source.Plus. Preliminary images seem quite good (examples), suggesting that public data may be enough. Preprint: Public Domain 12M: A Highly Aesthetic Image-Text Dataset with Novel Governance Mechanisms.

- Midjourney releases Patchwork, a multi-player world-building tool.

Vision

- Nvidia introduces NVILA: Efficient Frontier Visual Language Models.

3D

- Monumental Labs is using AI-enabled robotic stone carving to make Renaissance-style sculpture more common.

Science

- Nature writeup: Virtual lab powered by ‘AI scientists’ super-charges biomedical research: Could human–AI collaborations be the future of interdisciplinary studies? Preprint: The Virtual Lab: AI Agents Design New SARS-CoV-2 Nanobodies with Experimental Validation. They use a team of AI assistants to accelerate work.

- ORGANA: A Robotic Assistant for Automated Chemistry Experimentation and Characterization (video).