General

- Existential Risk and Growth (by Philip Trammell and Leopold Aschenbrenner). Their model challenges the conventional wisdom that slowing down AI development reduces existential risk; they suggest that in some cases slowing down actually increases it. (This is similar to the overhang arguments.)

- Apple picks Google’s Gemini to run AI-powered Siri coming this year (statement from Google).

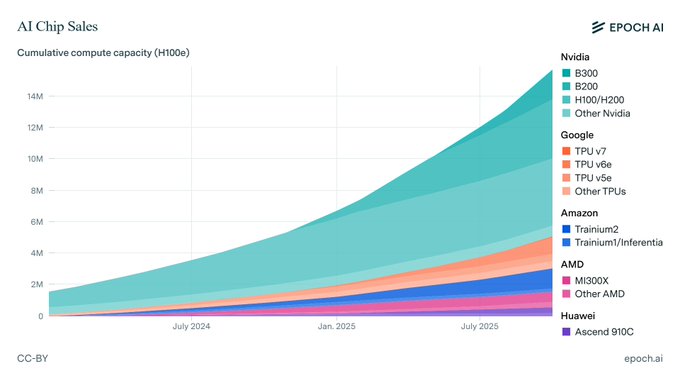

- Epoch AI updates: AI Chip Sales.

- Anthropic updates their economics work:

- ARC-AGI-3 Toolkit released. The competition launches on March 25, 2026, and the benchmark requires AIs to solve video-game-like tasks and puzzles.

Education

Research Insights

- DiffThinker: Towards Generative Multimodal Reasoning with Diffusion Models (project, code). Treats visual reasoning as an image-to-image diffusion task.

- Pruning as a Game: Equilibrium-Driven Sparsification of Neural Networks. Trained networks can be significantly compressed: the authors report a 90% reduction in size with a minimal (<0.5%) drop in performance. The approach improves on other pruning methods by having parameters “compete” with one another for representation in the equilibrium pool.

- The Bayesian Geometry of Transformer Attention. Suggests that transformers are not just approximating Bayesian inference, but that Bayesian inference is actually the computational primitive that attention implements.

- Do Large Language Models Know What They Are Capable Of? The paper reports that LLMs are currently poor at predicting their own capabilities and limitations.

- From Entropy to Epiplexity: Rethinking Information for Computationally Bounded Intelligence.

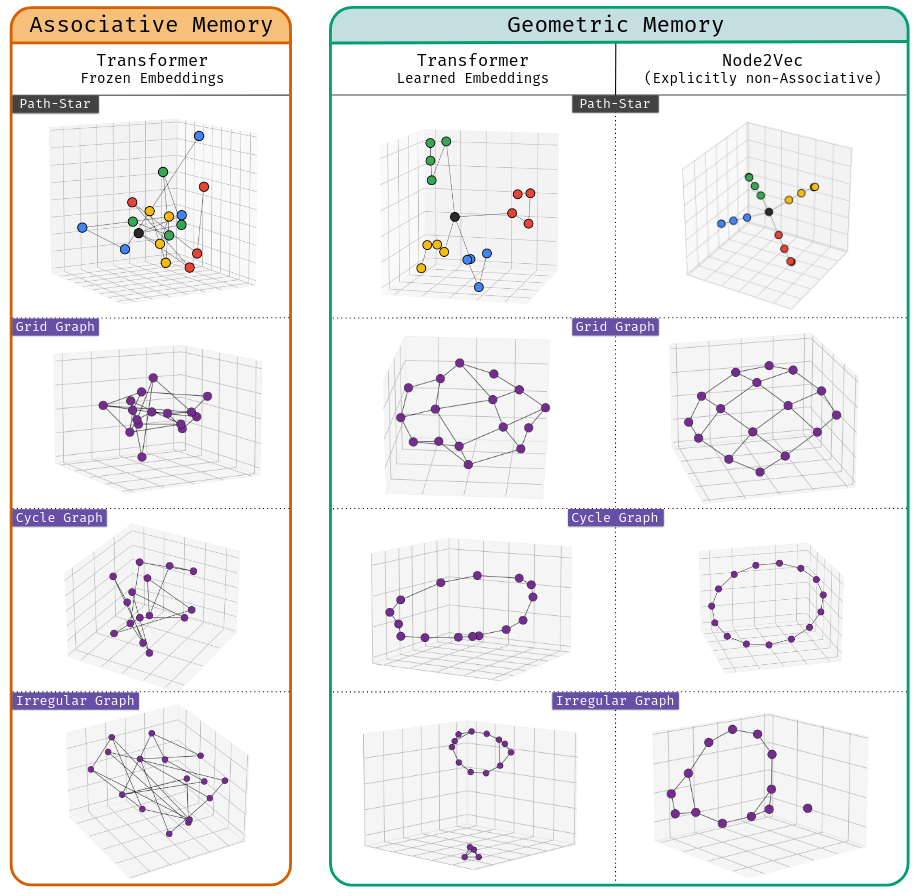

- A number of results advancing the state of the art in LLM context handling and memory:

- Recursive Language Models. Treats context as a tool-use problem; the model can iteratively probe/query/search through the full context history to pull out relevant information into the current context window.

- Nvidia: Reimagining LLM Memory: Using Context as Training Data Unlocks Models That Learn at Test-Time. They claim that this effectively compresses context into weights at inference time.

- Entropy-Adaptive Fine-Tuning: Resolving Confident Conflicts to Mitigate Forgetting.

- SimpleMem: Efficient Lifelong Memory for LLM Agents.

- MemRL: Self-Evolving Agents via Runtime Reinforcement Learning on Episodic Memory.

- Conditional Memory via Scalable Lookup: A New Axis of Sparsity for Large Language Models.

- Active Context Compression: Autonomous Memory Management in LLM Agents.

- The AI Hippocampus: How Far are We From Human Memory?

- Reasoning Models Generate Societies of Thought.

- Anthropic: The assistant axis: situating and stabilizing the character of large language models (preprint).
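The context-probing loop described for Recursive Language Models can be illustrated with a toy sketch in which the full history stays outside the working window and only retrieved chunks are pulled in. This is not the paper's implementation: `search_context` is a hypothetical keyword-overlap search tool, the stop-word list is an assumption, the "model" is replaced by a rule that uses each retrieved chunk as the next query, and the window budget is counted in characters rather than tokens.

```python
import re
from typing import List, Optional, Set

# Hypothetical stop-word list so that filler words do not count as matches.
STOPWORDS = {"the", "is", "in", "a", "an", "of", "on", "was", "where", "what"}


def tokenize(text: str) -> Set[str]:
    """Lowercased content words; a crude stand-in for a real retriever."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def search_context(history: List[str], query: str, exclude: Set[int]) -> Optional[int]:
    """Hypothetical search tool: index of the unused chunk with the most word overlap, or None."""
    q = tokenize(query)
    best, best_score = None, 0
    for i, chunk in enumerate(history):
        if i in exclude:
            continue
        score = len(q & tokenize(chunk))
        if score > best_score:
            best, best_score = i, score
    return best


def probe(history: List[str], question: str, max_steps: int = 3, budget_chars: int = 200) -> List[str]:
    """Iteratively pull relevant chunks from the full history into a small working window.

    The full history never enters the window wholesale; only retrieved chunks do.
    Each retrieved chunk becomes the next query, standing in for a model that
    follows a lead it just found (an assumption made for illustration).
    """
    window: List[str] = []
    used: Set[int] = set()
    query = question
    for _ in range(max_steps):
        idx = search_context(history, query, used)
        if idx is None:
            break  # nothing relevant left to pull in
        chunk = history[idx]
        if sum(len(c) for c in window) + len(chunk) > budget_chars:
            break  # the working context window is full
        window.append(chunk)
        used.add(idx)
        query = chunk  # recursive step: the found chunk drives the next lookup
    return window
```

With a history where one note says the vault code is in the blue notebook and another says where the notebook is kept, a question about the vault code pulls in both notes, in that order, and leaves unrelated chunks out of the window.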

LLM

- OpenAI adds a dedicated Health capability to ChatGPT.

- Anthropic similarly adds health-specific features: Advancing Claude in healthcare and the life sciences.

- Anthropic introduces Cowork, which applies the agentic behavior of Claude Code to more general tasks. It is initially available only on macOS, to Max subscribers.

- Google launches Universal Commerce Protocol (UCP) to standardize the way agents interact with commerce.

- Google: Gemini introduces Personal Intelligence, which draws on data from Gmail, Google Calendar, etc.

- Anthropic adds Excel support for Claude.

- Arcee AI releases open-source Trinity Large 400B.

- Google integrates Gemini into Chrome.

AI Agents

- Towards a science of scaling agent systems: When and why agent systems work.

- An interesting turn of events:

- Peter Steinberger created clawdbot (a pun on “Claude Bot”), an AI agent given broad autonomy over its owner’s resources. The tool immediately proved incredibly powerful, addictive to use, and dangerous from a computer-security perspective.

- Anthropic requested a name change; the project rebranded as moltbot, and then as OpenClaw.

- A separate group then launched moltbook, a Reddit-like social media platform exclusively for AI agents. Within a few days, >30,000 agents had signed up, forming sub-communities and discussing diverse topics. A day later, registrations had reached 1.5M agents, which had generated 230,000 comments.

- There are many unknowns (for instance, to what extent viral examples of AI posts were actually nudged by humans), and of course all of the AI behaviors are downstream of training on Internet forum data and of being prompted by their human owners to engage with the site. Nevertheless, there is reason enough to find this quite interesting.

AI Safety

- Nature publication: Training large language models on narrow tasks can lead to broad misalignment (cf. preprint, 2025-02).

- Science publication: How malicious AI swarms can threaten democracy: The fusion of agentic AI and LLMs marks a new frontier in information warfare (preprint).

Image Synthesis

- Midjourney releases its new anime image model, Niji v7.

Video

- Runway Gen-4.5 running on Nvidia Rubin.

- LTX-2, an open-source video model, delivers 20 s clips at 4K resolution, with audio (examples).

- Krea teases realtime video editing.

- Luma unveils Ray3.14 (faster generation, lower cost, higher resolution).

3D

World Synthesis

- Google begins opening access to Project Genie: generative worlds.

Science

- Probing Scientific General Intelligence of LLMs with Scientist-Aligned Workflows.

- Scientific production in the era of large language models. AI increases productivity of scientists (as imperfectly measured by paper output).

- Universally Converging Representations of Matter Across Scientific Foundation Models. Shows that scientific foundation models converge to a common representation of molecules. (Cf. convergent representations for language.)

- A multimodal sleep foundation model for disease prediction. AI can predict 130 diseases from one night of sleep data.

- SciSciGPT: advancing human–AI collaboration in the science of science.

- Deep contrastive learning enables genome-wide virtual screening (tool).

- AI has begun to make genuine discoveries. One recent spate of examples involves solutions to several of the Erdős problems.

- Erdős Problems #728 and #729 solved by Aristotle using ChatGPT 5.2 Pro.

- Erdős Problem #397 solved by Neel Somani using ChatGPT 5.2 Pro.

- Erdős Problem #205 solved by Aristotle using ChatGPT 5.2 Pro.

- Google updates their medical foundation model to MedGemma 1.5: Next generation medical image interpretation with MedGemma 1.5 and medical speech to text with MedASR.

- Why LLMs Aren’t Scientists Yet: Lessons from Four Autonomous Research Attempts.

- Interval cancer, sensitivity, and specificity comparing AI-supported mammography screening with standard double reading without AI in the MASAI study: a randomised, controlled, non-inferiority, single-blinded, population-based, screening-accuracy trial.
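One common way to make "models converge to a common representation" concrete is linear centered kernel alignment (CKA), which compares how two models embed the same set of inputs. The convergence paper's own similarity measure may differ; this sketch assumes NumPy and that both representation matrices have one row per shared input (e.g. the same molecules, in the same order).

```python
import numpy as np


def linear_cka(x: np.ndarray, y: np.ndarray) -> float:
    """Linear centered kernel alignment between two representation matrices.

    x has shape (n, p) and y has shape (n, q): the same n inputs embedded by two
    models with possibly different feature widths. The result lies in [0, 1];
    1 means the representations agree up to an orthogonal transform and a
    uniform rescaling.
    """
    # Center each feature so that only the relative geometry of inputs matters.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(y.T @ x, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y))
```

Because CKA ignores rotations and rescaling of the feature space, two models can score near 1 even if their individual neurons look nothing alike, which is the sense in which representations "converge"; unrelated random embeddings score near 0.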

Hardware

- Nvidia announces its next-generation AI platform: Rubin.

- Razer’s Project Ava is a desktop device, powered by Grok, that displays an avatar and can help, coach, and so on.

Robots

- Boston Dynamics shares updates on its upgraded Atlas design. The robot’s joints have a large range of rotation, allowing it to reverse its working direction quickly and easily.

- 1X shares updates on the 1X World Model that underlies its Neo robot, claiming improved self-learning. The system predicts task execution by, in essence, running a video model to predict future states and the results of its actions.

- Fauna Robotics announces Sprout, a cute humanoid designed to work around people and intended as a development platform.

- Figure announces its Helix 02 model, which shows good whole-body control during task execution.
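The predict-then-act idea described for the 1X World Model can be illustrated with a minimal random-shooting planner: sample candidate action sequences, roll each one out through a world model, and keep the sequence whose predicted outcome lands closest to the goal. This is a generic model-based planning sketch, not 1X's method: `toy_world_model` is a hypothetical one-dimensional stand-in for a learned video model, and the goal-distance cost is chosen purely for illustration.

```python
import random
from typing import Callable, List, Tuple

WorldModel = Callable[[float, float], float]


def toy_world_model(state: float, action: float) -> float:
    """Hypothetical learned dynamics: each action nudges a 1-D state.

    A real robot world model would predict future camera frames instead.
    """
    return state + 0.5 * action


def rollout(model: WorldModel, state: float, actions: List[float]) -> float:
    """Imagine the outcome of an action sequence without touching the real world."""
    for action in actions:
        state = model(state, action)
    return state


def plan(
    model: WorldModel,
    state: float,
    goal: float,
    horizon: int = 5,
    n_candidates: int = 200,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Random-shooting planner: sample action sequences, keep the best predicted one."""
    rng = random.Random(seed)
    best_actions: List[float] = []
    best_cost = float("inf")
    for _ in range(n_candidates):
        actions = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        cost = abs(rollout(model, state, actions) - goal)  # predicted distance to goal
        if cost < best_cost:
            best_actions, best_cost = actions, cost
    return best_actions, best_cost
```

In a receding-horizon (model-predictive control) loop, a robot would execute only the first planned action, observe the resulting state, and plan again, so errors in the world model's predictions get corrected by fresh observations.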