General

Research Insights

- Meta: Provable Scaling Laws of Feature Emergence from Learning Dynamics of Grokking.

- MIT, Harvard, Google DeepMind: Why Can’t Transformers Learn Multiplication? Reverse-Engineering Reveals Long-Range Dependency Pitfalls. They explain why transformers, by default, fail at this task; and how to fix it. By training a model on the full step-by-step logic, and progressively removing steps, it was forced to learn this logic internally.

- Less is More: Recursive Reasoning with Tiny Networks (blog). A small (7M) network is able to out-reason larger systems, exploiting two recursive networks. This small model is optimized to handle a certain class of puzzle; thus it cannot handle general tasks (or any language task) like an LLM. But the work demonstrates that a small iterative system can deploy remarkably strong “reasoning” effort.

- Inoculation Prompting: Instructing LLMs to misbehave at train-time improves test-time alignment. Counterintuitive result: train model to misbehave. By explicitly training on labeled bad behavior, the model recognizes it as distinct from desired behavior, so that when you prompt for desired behavior, the unintended bad behavior is not produced. This can be used to avoid reward hacking.

OpenAI

Agents

Video

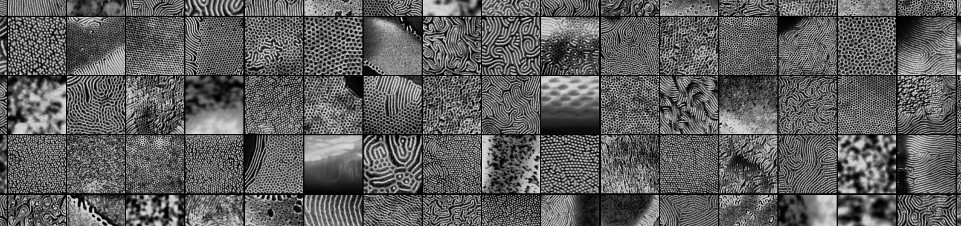

- Wired: The Future of AI Isn’t Just Slop. Behold Neural Viz, the first great cinematic universe of the AI era. It’s from a guy named Josh. (NeuralViz YouTube channel)

Science

- AI for Scientific Discovery is a Social Problem.

- Periodic Labs launches with the goal of creating an AI Scientist.

- Microsoft: Strengthening nucleic acid biosecurity screening against generative protein design tools.

Robots

- Figure announces Figure 03 robot.

- DEEP Robotics announces DR02, an “all weather” humanoid.