Let’s pull together some information (as of 2024-08-16):

- Modern LLMs can generate highly coherent text, and in some sense have quietly passed the famous Turing Test. This has been evaluated, with GPT-4-caliber systems broadly passing the test.

- In March 2023, there was brief online debate about whether these videos feature a human or an AI avatar: video 1, video 2.

- Is the answer obvious to you? There are details that make it look fake (e.g. fuzziness between hair and background, unphysical hair motion, blurriness around the nose ring). Conversely, other aspects (hands, mannerisms) seem too good to be AI-generated. And one must be on the lookout for an intentional fake (a human acting or voicing strangely on purpose, intentionally adding visual artifacts, etc.).

- The answer, it seems, is that this is a deepfake (made using Arcads): the user provides a video, and then the voice is replaced and the mouth movements are synced to the new audio. So it is a normal human-actor video with AI audio and lip-sync, not AI-generated from scratch.

- Of course, the deepfake implications are obvious, since there is plenty of video of notable people to draw from. E.g. here’s an Obama deepfake made using Argil.

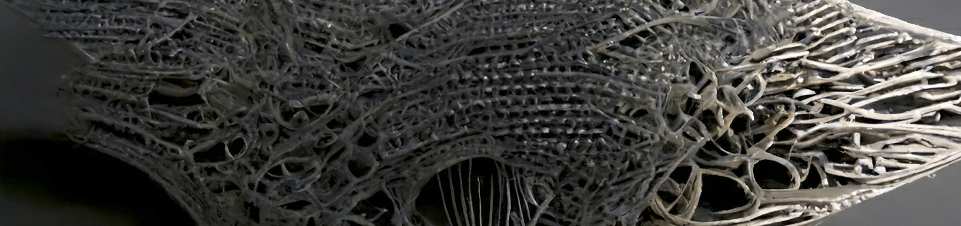

- In August 2024, this image (and the corresponding video) was presented as an example of genAI that a casual observer would initially assume to be real.

- In August 2024, the 𝕏 account 🍓🍓🍓 (@iruletheworldmo) began spreading rumors about upcoming OpenAI releases (related to Q*/Project-Strawberry, GPT-5, forthcoming AGI, UBI, etc.). It grew a large following (30k followers in two weeks), despite only one of its many outlandish predictions being validated. (The account mentioned SWE-Bench Verified three days before the official announcement.)

- This sparked rumors that this account was actually an AI (e.g. an OpenAI test of an agentic system, or a marketing firm demonstrating engineered hype-based follower growth) or even a test of a next-generation model (e.g. GPT-5).

- Although the evidence for these claims is weak, the fact that they are not easy to rule out is itself telling.

- On the evening of 2024-08-15, there was an 𝕏 spaces meetup wherein various users voice-chatted with Lily Ashwood (@lilyofashwood). The discussion centered on figuring out whether Lily was human or AI (clip, full recording). Her responses seemed at times to draw upon remarkably encyclopedic knowledge, her voice was measured and slightly stilted, and her interactions were occasionally strange. All of this points to her being a language/voice model. But at other times, her jokes or creative responses were surprisingly human-like. Was this truly an AI model, or a human mimicking a TTS speaking style (and using an LLM to come up with AI-like responses)? The discussion space was surprisingly split in opinion.

- New paper: Personhood credentials: Artificial intelligence and the value of privacy-preserving tools to distinguish who is real online.

- It is becoming increasingly difficult to distinguish human from synthetic. CAPTCHA tests are now often solvable by automated systems. And genAI photo/voice/video is now sufficiently convincing that it will be taken as genuine at first glance.

- They propose personhood credentials, which could be issued by a trusted authority (e.g. a government) using cryptography. This would allow a person to demonstrate, in various online interactions, that they are a real person without revealing exactly who they are.
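
To make the idea of proving personhood without revealing identity a bit more concrete, here is a minimal sketch using textbook RSA blind signatures. This is one classic technique with that flavor, chosen here as an assumption; it is not necessarily the construction the paper has in mind. The toy key, the function names, and the pseudonym string are all hypothetical, and the sketch ignores padding, credential limits per person, and revocation.

```python
import hashlib
import math
import secrets

# Toy issuer key built from two known Mersenne primes. Illustration only:
# real deployments use vetted libraries, proper padding, and larger keys.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # issuer's private exponent


def hash_to_int(message: bytes) -> int:
    """Map a message to an integer modulo N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def blind(message: bytes) -> tuple[int, int]:
    """Holder: hide the message from the issuer with a random factor r."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            break
    blinded = (hash_to_int(message) * pow(r, E, N)) % N
    return blinded, r


def issuer_sign(blinded: int) -> int:
    """Issuer: sign after checking personhood out of band (not modeled)."""
    return pow(blinded, D, N)


def unblind(blinded_signature: int, r: int) -> int:
    """Holder: strip the blinding factor to get a signature on the message."""
    return (blinded_signature * pow(r, -1, N)) % N


def verify(message: bytes, signature: int) -> bool:
    """Any site: check the issuer's signature using only the public key."""
    return pow(signature, E, N) == hash_to_int(message)


# Hypothetical flow: the holder picks a pseudonym, gets it signed blind,
# and later shows the pseudonym plus signature to a website.
pseudonym = b"hypothetical-pseudonym-for-one-site"
blinded, r = blind(pseudonym)
credential = unblind(issuer_sign(blinded), r)
print(verify(pseudonym, credential))
```

The issuer only ever sees the blinded value, so it cannot later link the credential shown to a website back to the person it verified, while the website can still check that a trusted issuer vouched for the holder.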

Overall, distinguishing human from AI in an online setting is becoming challenging, especially in cases where a human can intervene where necessary to maintain the ruse.

Update 2024-09-01

- A video from the Beijing World Robotics Congress, showing a variety of cyberpunk, female-coded robots moving with remarkable smoothness, caused a stir.

- It turns out that the robots in the background are animatronics, while the robots in the foreground are human actors made up to look like robots. Behind-the-scenes videos show the actors having makeup applied and posing for the camera. The act is partially sold by the actors moving in slightly inhuman ways.

- 1X unveiled a video of their new Neo robot. The fabric cladding and remarkably smooth movement led many people to say that this looks like a person in a costume (not unlike the first teaser for the Tesla Optimus robot).

- In this case, it appears to be a genuine robot. One can see more examples of this robot’s motion in other videos: doing chores, making coffee, walking slowly, technical discussion.

- In the past, some websites (e.g. Reddit) have used photo verification (e.g. holding a handwritten note) to confirm someone is human. But now, AI-generated photo and even video verifications are quite good: example 1, example 2.

- AI avatars, which map a human performance onto a synthetic character, are also rapidly improving: example 1, example 2. While these currently require some effort, automated and real-time versions are advancing quickly as well. (E.g. Deep-Live-Cam, example.)