Multi-Label Visual Feature Learning with Attentional Aggregation

Citation

Guan, Z.; Yager, K.G.; Yu, D.; Qin, H. "Multi-Label Visual Feature Learning with Attentional Aggregation" Winter Conference on Applications of Computer Vision (WACV) 2020, 1 1–9.

Summary

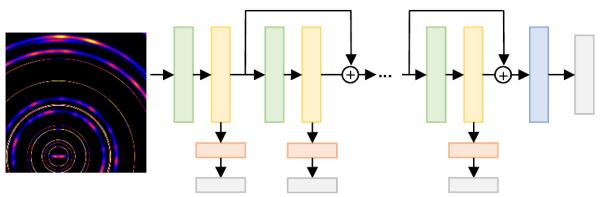

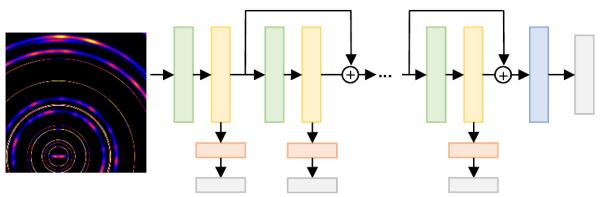

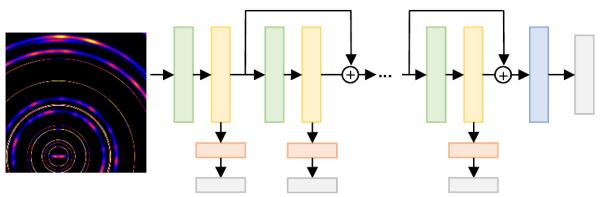

We add attentional modules to a deep neural network to improve its ability to recognize features in x-ray scattering detector images.

Abstract

Convolutional neural networks (CNNs) are now being applied to specialized problems in scientific communities that would otherwise not be adequately addressed. In this paper, we systematically study a multi-label annotation problem for x-ray scattering images in materials science. For this application, we tackle an open challenge in training CNNs: identifying weak scattering patterns in the presence of diffuse background interference, which is common in scientific imaging. We propose an Attentional Aggregation Module (AAM) to enhance feature representations. First, we reweight and highlight important features in the images using data-driven attention maps. We decompose the attention maps into channel and spatial attention components. In the spatial attention component, we design a mechanism that generates multiple spatial attention maps tailored for diversified multi-label learning. Then, we condense the enhanced local features into non-local representations by performing feature aggregation. Both attention and aggregation are designed as network layers with learnable parameters, so CNN training remains fully end-to-end, and we insert the module at several points in the network so that the feature enhancement is multi-scale. We conduct extensive experiments on CNN training and testing, as well as on transfer learning, and the empirical results confirm that our method enhances the discriminative power of visual features in scientific imaging.
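The abstract says channel attention reweights features but does not give its exact form. As an illustration only, the sketch below uses the widely known Squeeze-and-Excitation pattern (global average pooling, a small bottleneck MLP, sigmoid gates) as a stand-in; it is not claimed to be the AAM's channel branch. The function name, the weight shapes, and the reduction ratio `r` are hypothetical.

```python
import numpy as np


def channel_attention(features, w1, w2):
    """Squeeze-and-Excitation-style channel attention (hypothetical sketch).

    features: (C, H, W) feature map from one network stage.
    w1: (C // r, C) and w2: (C, C // r) are bottleneck weights,
    where r is an assumed channel-reduction ratio.
    Returns the reweighted feature map and the per-channel gates.
    """
    pooled = features.mean(axis=(1, 2))             # squeeze: (C,) channel summary
    hidden = np.maximum(w1 @ pooled, 0.0)           # bottleneck + ReLU: (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))    # sigmoid gates in (0, 1): (C,)
    return features * gates[:, None, None], gates   # excite: scale each channel


# Usage with random weights; in a real network w1 and w2 would be learned.
rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4
x = rng.standard_normal((C, H, W))
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
y, gates = channel_attention(x, w1, w2)
print(y.shape, gates.shape)  # (8, 16, 16) (8,)
```

Because the gates lie strictly between 0 and 1, this form can only attenuate channels relative to one another. It is one simple way to let weak but informative channels keep more of their signal than channels dominated by diffuse background.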
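The abstract describes generating multiple spatial attention maps and then condensing local features into non-local representations by aggregation. A minimal sketch of one plausible reading, assuming a 1x1 projection (`w_att`, hypothetical) that yields K score maps, a softmax over spatial positions, and attention-weighted pooling that turns each map into one C-dimensional descriptor. This is an illustrative stand-in, not the paper's exact layer.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along one axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multi_spatial_attention_pool(features, w_att):
    """Hypothetical multi-map spatial attention with weighted aggregation.

    features: (C, H, W) local feature map.
    w_att: (K, C) assumed 1x1-conv weights producing K spatial score maps.
    Returns (K, C) aggregated descriptors and the (K, H, W) attention maps.
    """
    C, H, W = features.shape
    flat = features.reshape(C, H * W)   # (C, N): one C-vector per position
    logits = w_att @ flat               # (K, N): one score map per head
    maps = softmax(logits, axis=1)      # each map sums to 1 over positions
    pooled = maps @ flat.T              # (K, C): attention-weighted sums
    return pooled, maps.reshape(-1, H, W)


rng = np.random.default_rng(1)
x = rng.standard_normal((8, 16, 16))
w_att = rng.standard_normal((3, 8))
desc, maps = multi_spatial_attention_pool(x, w_att)
print(desc.shape, maps.shape)  # (3, 8) (3, 16, 16)
```

Each descriptor is a convex combination of all local feature vectors, which is what makes it non-local. Setting `w_att` to zeros makes every map uniform, and each descriptor then reduces to plain global average pooling; with learned weights, different maps can concentrate on different image regions, which is one way several labels could draw on different scattering features.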
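The abstract frames the task as multi-label annotation, where a single scattering image can carry several labels at once. A common formulation, assumed here rather than taken from the paper, treats each label as an independent binary decision. It applies a sigmoid to each output, averages binary cross-entropy over labels, and thresholds the probabilities at prediction time. The function names and the 0.5 threshold are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def multilabel_bce(logits, targets, eps=1e-7):
    """Mean binary cross-entropy over independent labels.

    logits, targets: (batch, num_labels); targets are 0/1 indicators.
    """
    probs = np.clip(sigmoid(logits), eps, 1.0 - eps)  # avoid log(0)
    loss = -(targets * np.log(probs) + (1 - targets) * np.log(1 - probs))
    return loss.mean()


def predict_labels(logits, threshold=0.5):
    """Each label is switched on independently when its probability passes the threshold."""
    return sigmoid(logits) >= threshold


logits = np.array([[2.0, -1.0, 0.5], [-3.0, 4.0, -0.5]])
targets = np.array([[1, 0, 1], [0, 1, 0]])
print(multilabel_bce(logits, targets))
print(predict_labels(logits))
```

Unlike a softmax over classes, the per-label sigmoid does not force labels to compete, so an image can be tagged with any number of them, including none.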