What is the future of AI in science? I propose that the community should work together to build an exocortex: an extension of a researcher’s cognition and volition, made possible by AI agents operating on their behalf.

The rise of large language models (LLMs) presages a true paradigm shift in the way intellectual work is conducted. But what will this look like in practice? How will it change science?

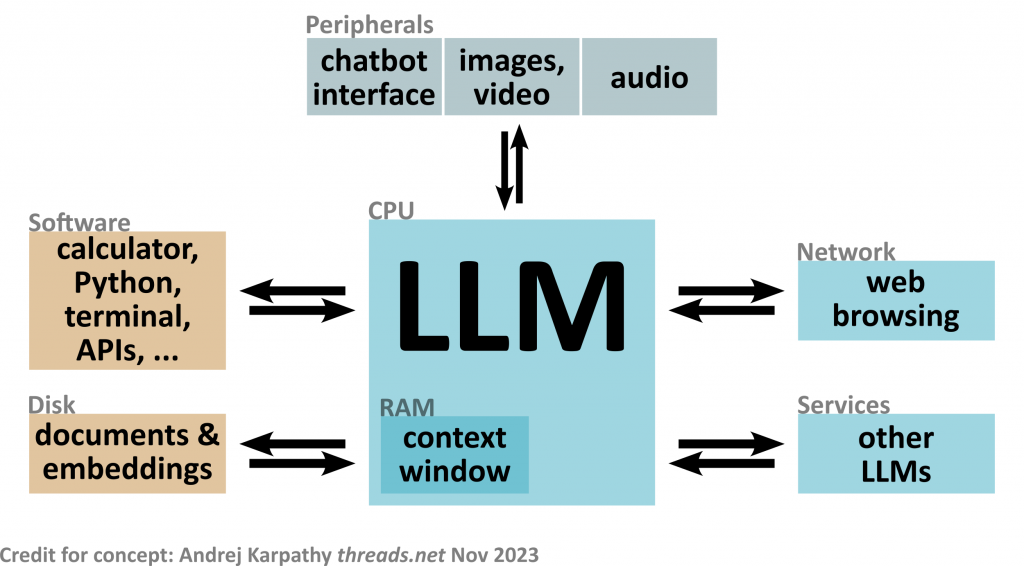

LLMs are often used as chatbots, but that framing perhaps misses their true potential as decision-making agents. Andrej Karpathy (1,2) accordingly casts the LLM as the kernel (orchestration agent) of a new kind of operating system: the LLM triggers tools and coordinates resources on behalf of the user.
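To make the "LLM as kernel" idea a little more concrete, here is a minimal sketch of an orchestration loop: the model decides which tool to call, the kernel executes it, and the result is fed back until the model declares it is done. Everything here is illustrative. `fake_llm` is a hypothetical stand-in with a hard-coded policy (not a real model or API), and the `TOOLS` registry and its entries are invented placeholders for things like instrument control or database queries.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    """One decision from the (stand-in) LLM: call a tool, or finish."""
    tool: Optional[str] = None  # name of the tool to call; None means "done"
    arg: str = ""               # argument passed to the tool
    answer: str = ""            # final answer, used only when tool is None


# Hypothetical tool registry; real tools might drive instruments or query databases.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda key: {"beamline": "ready"}.get(key, "unknown"),
    "upper": lambda text: text.upper(),
}


def fake_llm(goal: str, history: list[tuple[str, str]]) -> Action:
    """Hypothetical stand-in for an LLM call: a fixed two-step policy, NOT a real model."""
    if not history:
        return Action(tool="lookup", arg="beamline")
    if len(history) == 1:
        return Action(tool="upper", arg=history[-1][1])
    return Action(answer=f"{goal}: {history[-1][1]}")


def kernel(goal: str, llm=fake_llm, max_steps: int = 10) -> str:
    """Orchestration loop: the model decides, the kernel runs tools and feeds results back."""
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        action = llm(goal, history)
        if action.tool is None:
            return action.answer
        result = TOOLS[action.tool](action.arg)
        history.append((action.tool, result))
    raise RuntimeError("step budget exhausted")


if __name__ == "__main__":
    print(kernel("Check instrument status"))
```

The step budget (`max_steps`) is one simple guard against a model that never stops calling tools; a real system would likely need richer safeguards.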

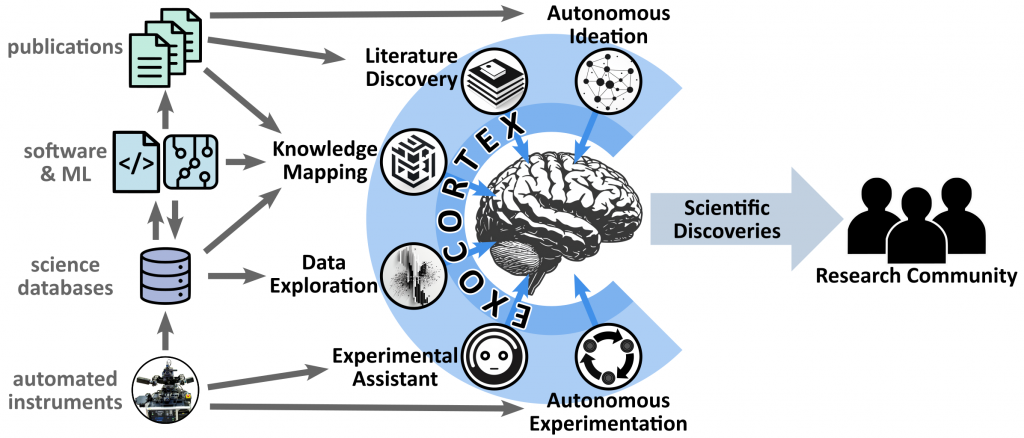

In the future, every person might have an exocortex: a persistently running swarm of AI agents that work on their behalf, thereby augmenting their cognition and volition. Crucially, the AI agents do not merely communicate with the human; they talk to each other, solving complex problems through iterative work, and only surfacing the most important results or decisions for human consideration. The exocortex multiplies the human’s intellectual reach.

A science exocortex can be built by developing a set of useful AI agents (for experimental control, for data exploration, for ideation), and then connecting them so that they can coordinate and tackle more complex problems.
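As a rough illustration of agents coordinating among themselves and surfacing only important items to the human, here is a toy message-passing sketch. The agent classes (`Ideation`, `Experiment`, `Analysis`), the `importance` score, and the `threshold` filter are all hypothetical choices made for this example; each agent's `handle` method is a hard-coded placeholder where a real system would call an LLM or an instrument.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    recipient: str
    content: str
    importance: float  # 0..1; assumed score, only high values reach the human


class Agent:
    """Base class for a hypothetical exocortex agent."""
    name = "agent"

    def handle(self, msg: Message) -> list[Message]:
        return []


class Ideation(Agent):
    name = "ideation"

    def handle(self, msg: Message) -> list[Message]:
        # A goal from the human becomes a proposed experiment;
        # a finding from analysis becomes a hypothesis worth surfacing.
        if msg.sender == "human":
            return [Message(self.name, "experiment", f"measure sample for: {msg.content}", 0.2)]
        return [Message(self.name, "human", f"hypothesis supported by {msg.content}", 0.9)]


class Experiment(Agent):
    name = "experiment"

    def handle(self, msg: Message) -> list[Message]:
        # Placeholder "measurement": pass data on, plus a routine status note.
        return [
            Message(self.name, "analysis", "raw data #1", 0.1),
            Message(self.name, "human", "experiment complete", 0.3),
        ]


class Analysis(Agent):
    name = "analysis"

    def handle(self, msg: Message) -> list[Message]:
        return [Message(self.name, "ideation", f"peak found in {msg.content}", 0.5)]


def run_exocortex(goal: str, agents: list[Agent], threshold: float = 0.8,
                  max_msgs: int = 100) -> list[str]:
    """Route messages between agents; return only human-bound items above threshold."""
    registry = {a.name: a for a in agents}
    queue = deque([Message("human", "ideation", goal, 1.0)])
    surfaced: list[str] = []
    processed = 0
    while queue and processed < max_msgs:
        msg = queue.popleft()
        processed += 1
        if msg.recipient == "human":
            if msg.importance >= threshold:
                surfaced.append(msg.content)
            continue  # low-importance items are filtered out
        queue.extend(registry[msg.recipient].handle(msg))
    return surfaced


if __name__ == "__main__":
    print(run_exocortex("crystal phase", [Ideation(), Experiment(), Analysis()]))
```

With the default threshold, the routine "experiment complete" note is suppressed and only the hypothesis reaches the human; lowering `threshold` lets more of the agents' chatter through.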

Here is a paper with more details: *Towards a Science Exocortex*, Digital Discovery 2024, doi: 10.1039/D4DD00178H (originally posted to arXiv).

The exocortex is obviously speculative. It is a research problem to identify the right design, build it, and deploy it for research. But the potential upside is enormous: liberating scientists from micromanaging details so they can focus on high-level scientific problems, and correspondingly accelerating the pace of scientific discovery.

Here is an AI-generated podcast discussing my exocortex paper (made using Google’s NotebookLM).