Research Insights

- Preprint: JPEG-LM: LLMs as Image Generators with Canonical Codec Representations. The authors test what happens if you train a language model (a default Llama architecture) on the data stream of an image file (e.g. JPEG) or even a video file. The LLM does learn the relevant structure, without any vision-specific modifications (and it even outperforms some more tailored approaches). This again highlights the universal modeling capability of the transformer, and bolsters the argument for building multi-modal models by naively training on many data types.

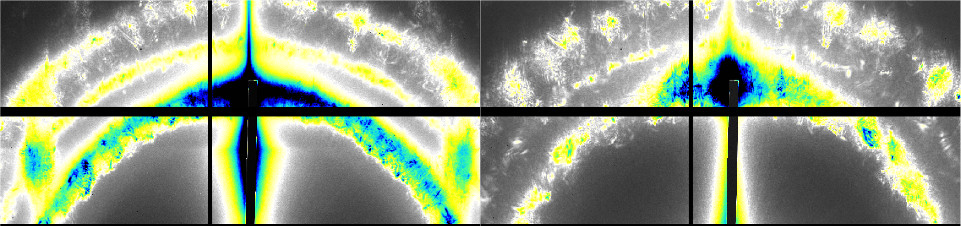
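To make the idea concrete, here is a minimal sketch of how an image file's raw bytes could be turned into a token sequence for ordinary next-token prediction. It assumes one token per byte (a 256-symbol vocabulary) plus hypothetical BOS/EOS ids; the paper's actual tokenization may differ (for instance, it may merge bytes into larger units), so treat this as an illustration rather than the authors' pipeline.

```python
from pathlib import Path

# Hypothetical special-token ids placed after the 256 possible byte values.
BOS_ID = 256
EOS_ID = 257
VOCAB_SIZE = 258


def bytes_to_tokens(data: bytes) -> list[int]:
    """Map a raw file byte stream to token ids (assumption: one token per byte)."""
    return [BOS_ID] + list(data) + [EOS_ID]


def next_token_pairs(tokens: list[int], context: int) -> list[tuple[list[int], int]]:
    """Build (context window, next token) training pairs for a causal LM."""
    pairs = []
    for i in range(1, len(tokens)):
        window = tokens[max(0, i - context):i]
        pairs.append((window, tokens[i]))
    return pairs


def load_image_tokens(path: str) -> list[int]:
    """Read an image file (e.g. a .jpg) exactly as stored on disk, no decoding."""
    return bytes_to_tokens(Path(path).read_bytes())


if __name__ == "__main__":
    # JPEG files begin with the SOI marker 0xFF 0xD8; this is a tiny fake stream.
    fake_jpeg = bytes([0xFF, 0xD8, 0xFF, 0xE0, 0x00, 0x10])
    toks = bytes_to_tokens(fake_jpeg)
    print(toks)
    for window, target in next_token_pairs(toks, context=3)[:3]:
        print(window, "->", target)
```

The point of the sketch is that nothing image-specific appears anywhere: the model only ever sees integers from a fixed vocabulary, exactly as with text.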

- Preprint: Factorized-Dreamer: Training A High-Quality Video Generator with Limited and Low-Quality Data.

LLMs

- Anthropic added a dedicated “take screenshot” button to the Claude interface.

- Nous Research released the open-source Hermes 3 models (8B, 70B, 405B). See also the technical report.

- Salesforce AI released new open multi-modal models: xGen-MM (BLIP-3). Preprint and code available.

- Anthropic released resources to help people improve their prompting: a real-world prompting course and an interactive tutorial.

AI Agents

- Preprint: Automated Design of Agentic Systems. There are many ongoing efforts to design agentic systems on top of LLMs. The paper argues that the design itself should be treated as an automated ML task (code).

  - Some related prior work:

    - TextGrad: Automatic “Differentiation” via Text (back-propagating textual feedback, analogous to gradients, through LLM pipelines to optimize them).

    - Symbolic Learning Enables Self-Evolving Agents (AI-driven optimization of LLM workflows).

    - EvoAgent: Towards Automatic Multi-Agent Generation via Evolutionary Algorithms.

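The common thread in these papers is treating agent design as a search problem. The sketch below is a toy archive-based evolutionary search in that spirit; it is not the method of any of the cited papers. The step vocabulary, the `evaluate` scoring rule, and the random `mutate` edits are all hypothetical stand-ins: in ADAS, for example, a meta-agent LLM proposes new designs as code, and evaluation means running the agent on real tasks.

```python
import random
from dataclasses import dataclass

# Hypothetical building blocks an agent design might combine.
STEPS = ["plan", "retrieve", "reason", "critique", "refine", "answer"]


@dataclass
class Design:
    steps: list[str]
    score: float = 0.0


def evaluate(design: Design) -> float:
    """Toy stand-in for benchmarking a design. A real system would run the
    agent on held-out tasks; here we just reward a few hand-picked traits."""
    s = design.steps
    score = 0.0
    if s and s[-1] == "answer":
        score += 1.0
    if "critique" in s and "refine" in s and s.index("critique") < s.index("refine"):
        score += 1.0
    score -= 0.1 * len(s)  # penalize long (expensive) pipelines
    return score


def mutate(design: Design, rng: random.Random) -> Design:
    """Propose a variant via a random edit (stand-in for an LLM proposal step)."""
    steps = list(design.steps)
    op = rng.choice(["insert", "delete", "swap"])
    if op == "insert" or len(steps) < 2:
        steps.insert(rng.randrange(len(steps) + 1), rng.choice(STEPS))
    elif op == "delete":
        steps.pop(rng.randrange(len(steps)))
    else:
        i, j = rng.sample(range(len(steps)), 2)
        steps[i], steps[j] = steps[j], steps[i]
    return Design(steps)


def search(generations: int = 50, archive_size: int = 8, seed: int = 0) -> list[Design]:
    """Grow an archive of designs, always keeping the best-scoring ones."""
    rng = random.Random(seed)
    archive = [Design(["answer"])]
    archive[0].score = evaluate(archive[0])
    for _ in range(generations):
        parent = rng.choice(archive)
        child = mutate(parent, rng)
        child.score = evaluate(child)
        archive.append(child)
        archive.sort(key=lambda d: d.score, reverse=True)
        del archive[archive_size:]  # drop the weakest designs
    return archive


if __name__ == "__main__":
    for d in search(generations=200, seed=1)[:3]:
        print(round(d.score, 2), d.steps)
```

Keeping an archive (rather than a single best design) mirrors the idea that earlier discoveries can seed later ones; swapping the toy `evaluate` for a real benchmark is where all the cost would lie.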

Policy

- OpenAI released a blog post: Disrupting a covert Iranian influence operation (cf. a post from Feb 2024 that similarly discussed shutting down malicious uses of OpenAI technology).

Image Synthesis

- Freepik has begun teasing a new image generator called Mystic (threads of examples: 1, 2). Quality seems good, though early previews tend to be cherry-picked. Speculation is that this is essentially Flux images run through the Magnific AI upscaler.

- Ideogram released v2.0, with improved image quality (showcased images), including text rendered within images.

- The Midjourney web interface is now available to everyone.

Video

- New generative video method: FancyVideo: Towards Dynamic and Consistent Video Generation via Cross-frame Textual Guidance. They try to improve video generation through better temporal and inter-frame guidance. The results look good considering the small-scale test.

- Tavus have a demo of a video-call AI agent named Carter (a short demo is available on their site; example conversation here). It is not indistinguishable from a human (though getting closer!), but is probably good enough for some meaningful use cases.

- Hedra released an update that lets users stylize a video (while maintaining the person/character), along with general improvements to their AI avatar model.

- Luma announced a v1.5 update to Dream Machine.

- Hotshot announced a new text-to-video model. Seems competitive with current models (examples, more examples).

- LTX Studio (AI filmmaking) is now available to all.

Vision

- Segment Anything with Multiple Modalities. Extends the “Segment Anything Model” (SAM) approach beyond 3-channel (RGB) data. For example, images might include a depth channel, thermal data, or some other modality. This extends segmentation to those other modalities.
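One generic way to feed extra channels into a model built for RGB is to widen its first layer. The NumPy sketch below shows a ViT-style patch embedding and a common initialization trick: new channels (say depth and thermal) start from the mean of the pretrained RGB filters. This is an assumption for illustration only, not necessarily how the paper handles other modalities; the function names are hypothetical.

```python
import numpy as np


def patch_embed(x: np.ndarray, weight: np.ndarray, patch: int) -> np.ndarray:
    """Non-overlapping patch embedding (equivalent to a conv with stride=patch).

    x: (C, H, W) image; weight: (D, C, patch, patch). Returns (num_patches, D).
    """
    c, h, w = x.shape
    d = weight.shape[0]
    assert weight.shape[1] == c, "channel mismatch between image and weight"
    gh, gw = h // patch, w // patch
    # Cut the image into gh * gw patches, each flattened in (C, patch, patch) order.
    patches = (
        x[:, : gh * patch, : gw * patch]
        .reshape(c, gh, patch, gw, patch)
        .transpose(1, 3, 0, 2, 4)
        .reshape(gh * gw, c * patch * patch)
    )
    return patches @ weight.reshape(d, -1).T


def expand_channels(rgb_weight: np.ndarray, extra: int) -> np.ndarray:
    """Grow a 3-channel patch-embedding weight to 3 + extra channels.

    Assumption: each new channel starts from the mean RGB filter, and the whole
    stack is rescaled by 3 / (3 + extra) to keep activations at a similar scale.
    """
    mean_filter = rgb_weight.mean(axis=1, keepdims=True)  # (D, 1, P, P)
    new = np.repeat(mean_filter, extra, axis=1)           # (D, extra, P, P)
    w = np.concatenate([rgb_weight, new], axis=1)
    return w * (3 / (3 + extra))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w_rgb = rng.normal(size=(16, 3, 4, 4))     # stand-in for pretrained RGB weights
    w_rgbdt = expand_channels(w_rgb, extra=2)  # RGB + depth + thermal
    image = rng.normal(size=(5, 8, 8))
    print(patch_embed(image, w_rgbdt, patch=4).shape)
```

The rest of the network can stay unchanged, since only the input layer depends on the channel count; whether to fine-tune it afterwards is a separate design choice.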

Brain

- Paper: The brain simulates actions and their consequences during REM sleep. Computer science folks can’t help but draw an analogy to ML (“the brain finetunes on synthetic data while it sleeps”).

- A related hypothesis from 2021: The overfitted brain: Dreams evolved to assist generalization (and “The overfitted brain hypothesis”). In this view, dreams (simulation) increase the diversity of training examples and help avoid overfitting to the more limited amount of real data. (Crucially, this suggests that simulated data in ML should try to be slightly out-of-distribution.)

- Chomsky and others have claimed that LLMs don’t model language, and would respond similarly to a real or an “impossible” language. A new paper tests this: Mission: Impossible Language Models. Contrary to Chomsky et al.’s intuition, the authors find that LLMs respond differently to real and impossible languages (languages that humans could not learn), and that LLMs also struggle to learn impossible languages. The results suggest that LLM training has some analogies to human language learning, and that LLMs build meaningful models of language.
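For intuition about what an “impossible” language looks like, here is a sketch of word-order perturbations applied to ordinary sentences, in the spirit of the shuffles and reversals the paper describes. The function names, seeding scheme, and window size are hypothetical choices made for this illustration, not the paper's exact definitions.

```python
import random


def full_reverse(tokens: list[str]) -> list[str]:
    """An 'impossible' variant: every sentence is written back-to-front."""
    return tokens[::-1]


def deterministic_shuffle(tokens: list[str], seed: int = 0) -> list[str]:
    """Reorder words with a permutation that depends only on sentence length
    (and the seed), so the rule is perfectly consistent but unnatural."""
    perm = list(range(len(tokens)))
    random.Random(seed * 1000 + len(tokens)).shuffle(perm)
    return [tokens[i] for i in perm]


def local_shuffle(tokens: list[str], window: int = 3, seed: int = 0) -> list[str]:
    """A milder perturbation: shuffle only within consecutive fixed-size windows."""
    out: list[str] = []
    for start in range(0, len(tokens), window):
        out.extend(deterministic_shuffle(tokens[start:start + window], seed))
    return out


if __name__ == "__main__":
    sentence = "the quick brown fox jumps over a lazy dog".split()
    print(" ".join(full_reverse(sentence)))
    print(" ".join(deterministic_shuffle(sentence, seed=1)))
    print(" ".join(local_shuffle(sentence, window=3)))
```

Training identical models on the original corpus and on each perturbed copy, then comparing how easily they learn, is roughly the kind of comparison the paper reports.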

Science

Robots

- Astribot released a teaser and launch video for the unveiling of their new S1 robot (humanoid upper-body on wheeled base). The video shows examples of cooking, serving tea, playing a musical instrument, and throwing a ball into a hoop.

- Zhejiang Humanoid Robot Innovation Center is working on a humanoid robot; see this video of NAVIAI.

- Unitree’s latest video shows their G1 humanoid behaving quite nimbly and athletically. This is similar to (or better than) what we saw in the Boston Dynamics update to their electric Atlas (clip from presentation). Unlike Atlas, though, Unitree claims that the displayed hardware is the mass-production design, which will sell for just $16,000.