General

- Investment in generative AI:

- Microsoft, BlackRock and the UAE have formed GAIIP (Global Artificial Intelligence Infrastructure Investment Partnership). The plan is to invest $100 billion in datacenters and power (mainly inside the United States).

- Microsoft, in particular, is pledging to buy power from the Three Mile Island nuclear facility, so the site’s Unit 1 reactor (shut down in 2019) is slated to restart by 2028. (Cf. Microsoft using genAI to write nuclear regulatory documents.)

- Google is planning on using small modular nuclear reactors for future >1 GW data centers (video).

- OpenAI had another funding round, securing an additional $6.5 billion while also turning away many potential investors.

- OpenAI pitched the White House on a buildout of US power/compute, including 5 GW data centers.

- These are strong signals that investment in AI is not slowing down.

- Hiring for AI Agents:

- OpenAI is hiring people to work on multi-agent research (application here). This is no surprise, since agentic systems are part of OpenAI’s strategy of progressively improving intelligence. (Cf. OpenAI’s five levels: conversational, reasoning, autonomous/agentic, innovating, organizational.)

- Google is hiring for multi-agent AGI.

- OpenAI announces OpenAI Academy, to help developers in low- and middle-income countries develop AI products.

- OpenAI CTO Mira Murati announced her departure. (Sam Altman wished her well, and noted that leadership changes are to be expected, especially in demanding roles at fast-paced organizations.)

- Sam Altman publishes a personal blog post: The Intelligence Age.

Research Insights

- Google DeepMind release: Training Language Models to Self-Correct via Reinforcement Learning. They demonstrate training a model to exhibit improved self-corrective behavior. (Is OpenAI doing something similar as part of o1?)

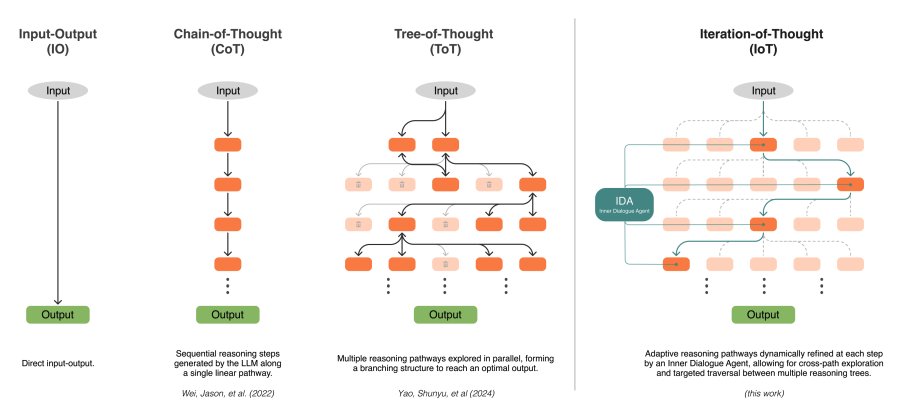

- Iteration of Thought: Leveraging Inner Dialogue for Autonomous Large Language Model Reasoning. The method sets up a conversation between two processes: an “inner dialogue” agent that generates context-specific prompts, and an LLM agent that uses those prompts to iteratively refine its response.

- To CoT or not to CoT? Chain-of-thought helps mainly on math and symbolic reasoning. The paper finds that, on many other kinds of tasks, adding chain-of-thought yields little improvement.

- Of course, it may well still be true that some kind of iteration or search at inference time adds value. The implication, however, may be that the specific kind of iteration should depend on the problem. This is nominally consistent with the observation that OpenAI o1 improves performance on some problems, but not all.

- Rephrase and Respond: Let Large Language Models Ask Better Questions for Themselves (discussion/explanation here). This method lets the LLM rephrase the prompt before answering, increasing the chance of a high-quality response. It is a different flavor of iterative self-prompting. As with many iteration schemes, giving the LLM more time to reason over the input should improve output quality.

- LLMs Still Can’t Plan; Can LRMs? A Preliminary Evaluation of OpenAI’s o1 on PlanBench. They find that OpenAI’s o1 is remarkably better at planning than prior LLMs. On the 2022 PlanBench Blocksworld benchmark, it reaches 98% (the prior best was 63%, from Llama 3.1 405B). This suggests we may now be entering an era of Large Reasoning Models (LRMs).

- Minstrel: Structural Prompt Generation with Multi-Agents Coordination for Non-AI Experts. They propose LangGPT, a structured “programming language” for prompts, to make prompts more rational and reusable. Minstrel itself is a multi-agent system that generates and optimizes prompts in this structure.

- Larger and more instructable language models become less reliable. The paper notes that models get better as they are scaled up, though not uniformly (improving a lot on some tasks and little on others). However, the responses of larger models can be harder to assess: they sound more confident and seem better, which makes their errors harder to spot. Overall, this makes larger models less reliable, in the sense that humans find it harder to judge what they can and cannot do.

- The frontier of AI (and the boundary of human-vs-artificial intelligence) is often described as a “jagged frontier” since intelligence manifests in many ways. AIs may exceed humans in some ways, while being worse in other ways. As models get better, their skills do not improve uniformly. This new paper helps elucidate some of the distinct skills of current AI.
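To make the Rephrase and Respond pattern mentioned above concrete, here is a minimal Python sketch of its one-step and two-step variants. The `llm` callables are hypothetical stand-ins for any text-in/text-out model call, and the prompt wording only approximates the paper’s templates; treat both as assumptions rather than the authors’ code.

```python
from typing import Callable

# Hypothetical signature for any text-in/text-out LLM call; swap in a real client.
LLM = Callable[[str], str]

# Assumed one-step suffix (approximates the paper's wording).
ONE_STEP_SUFFIX = "Rephrase and expand the question, and respond."


def rar_one_step(question: str, llm: LLM) -> str:
    """One call: the model rephrases the question and answers it in a single response."""
    return llm(f"{question}\n{ONE_STEP_SUFFIX}")


def rar_two_step(question: str, rephraser: LLM, responder: LLM) -> str:
    """Two calls: one model rewrites the question, a second answers using both versions."""
    rephrased = rephraser(
        f'"{question}"\nGiven the above question, rephrase and expand it '
        "to help you do better answering. Maintain all information in the original question."
    )
    prompt = (
        f"(original) {question}\n"
        f"(rephrased) {rephrased}\n"
        "Use your answer for the rephrased question to answer the original question."
    )
    return responder(prompt)
```

The two-step form lets a stronger model do the rephrasing while a different (possibly cheaper) model answers; passing the same callable for both gives the self-rephrasing case.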

LLM

- With the growing popularity of Chatbot Arena for ranking LLMs, lmsys.org have decided to spin it off as a separate site: lmarena.ai with its own blog.

- Anthropic released a blog post: Introducing Contextual Retrieval. It covers retrieval-augmented generation (RAG) best practices, noting how contextual methods can greatly improve retrieval performance. In this approach, each stored document chunk is prefixed with a short, chunk-specific description of how that chunk “fits in” to the overall document before it is embedded and indexed. They provide code examples.

- PearAI is an open-source LLM+IDE solution. (Void Code Editor is another open-source competitor to the currently dominant Cursor.)

- Anysphere (creators of Cursor) have released their prompt design strategy.

- Google released two new production Gemini models (Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002) with lower prices and higher rate limits.

- OpenAI announced that Advanced Voice Mode will (finally) roll out to ChatGPT Plus users over the next week.

- Meta announced Llama 3.2, which includes vision-capable models (11B and 90B) as well as lightweight text-only models (1B and 3B).

- Ai2 announced Molmo, a family of open multimodal models.
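The core of Anthropic’s Contextual Retrieval idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic’s code: `make_context` is a hypothetical stand-in for the LLM call that writes each chunk’s description, only the keyword (BM25) half of their pipeline is shown (no embeddings or reranking), and the example chunks and context strings are made up.

```python
import math
import re
from collections import Counter
from typing import Callable, List

# Hypothetical signature: given the full document and one chunk, return a short
# description situating the chunk (in practice, produced by an LLM call).
ContextFn = Callable[[str, str], str]


def contextualize(document: str, chunks: List[str], make_context: ContextFn) -> List[str]:
    """Prepend a chunk-specific context string to every chunk before indexing."""
    return [f"{make_context(document, chunk)} {chunk}" for chunk in chunks]


def tokenize(text: str) -> List[str]:
    """Lowercase alphanumeric tokens; deliberately simple."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Score each doc against the query with standard Okapi BM25."""
    toks = [tokenize(d) for d in docs]
    n = len(toks)
    avgdl = sum(len(t) for t in toks) / n
    # Document frequency: how many docs contain each term.
    df = Counter(term for t in toks for term in set(t))
    scores = []
    for t in toks:
        tf = Counter(t)
        score = 0.0
        for q in tokenize(query):
            if q not in tf:
                continue
            idf = math.log(1 + (n - df[q] + 0.5) / (df[q] + 0.5))
            score += idf * tf[q] * (k1 + 1) / (tf[q] + k1 * (1 - b + b * len(t) / avgdl))
        scores.append(score)
    return scores


if __name__ == "__main__":
    # Two made-up chunks from two different (made-up) filings.
    acme_chunk = "The company's revenue grew by 3% over the previous quarter."
    globex_chunk = "The company's revenue fell by 2% over the previous quarter."
    raw = [acme_chunk, globex_chunk]
    ctx = contextualize(
        "<ACME filing>", [acme_chunk],
        lambda doc, c: "This chunk is from ACME Corp's Q2 2023 filing.",
    ) + contextualize(
        "<Globex filing>", [globex_chunk],
        lambda doc, c: "This chunk is from Globex Inc's Q2 2023 filing.",
    )
    print("raw:", bm25_scores("ACME revenue", raw))
    print("contextualized:", bm25_scores("ACME revenue", ctx))
```

With these two chunks, the raw versions score identically for the query “ACME revenue” (neither chunk mentions ACME), whereas after contextualization the ACME chunk ranks first; that is the failure mode the post’s method is designed to fix.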

Tools

- Stream of Thought to Text is an interactive voice-transcription demo in which you can also use natural language to edit what has been written. It runs on Groq, so it is incredibly fast. It is just a demo, but it works remarkably well.

- xRx is a framework for building AI applications (code), made by 8090 and Groq.

Audio

- MuCodec: Ultra Low-Bitrate Music Codec (code). It compresses music audio using a combination of acoustic and semantic features, and seems to set a record for quality at a given bitrate.

- Google’s NotebookLM “generate podcast” feature has rightly been getting praise. There is now an open-source implementation of the idea: PDF2Audio (code).

Image Synthesis

- Colorful Diffuse Intrinsic Image Decomposition in the Wild (preprint). It can decompose an image into albedo, diffuse shading, and a non-diffuse (specular) residual.

- StableDelight: Revealing Hidden Textures by Removing Specular Reflections (builds upon earlier work estimating surface normals).

- Since surface normals and specular/diffuse components can now be identified or removed, it would seem “easy” to let one tune them arbitrarily (including over-emphasizing them, remixing just one channel, etc.). This provides yet another genAI image-editing tool.
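To make the remixing idea concrete: assuming the decomposition follows the common intrinsic-image model, in which each pixel and color channel is albedo times diffuse shading plus a residual (I = A·S + R), editing reduces to re-weighting the terms before recombining. Below is a minimal pure-Python sketch under that assumption; the function name and gain parameters are hypothetical, and a real tool would operate on full image arrays rather than lists of RGB tuples.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def recombine(albedo: List[RGB], shading: List[RGB], residual: List[RGB],
              shading_gain: float = 1.0, specular_gain: float = 1.0) -> List[RGB]:
    """Rebuild each pixel as A * (shading_gain * S) + specular_gain * R, clamped to [0, 1].

    With both gains at 1.0 this reproduces the assumed model I = A * S + R;
    specular_gain=0.0 removes highlights, and values above 1.0 exaggerate them.
    """
    out: List[RGB] = []
    for a, s, r in zip(albedo, shading, residual):
        channels = [min(1.0, max(0.0, ac * shading_gain * sc + specular_gain * rc))
                    for ac, sc, rc in zip(a, s, r)]
        out.append((channels[0], channels[1], channels[2]))
    return out


# One-pixel example with made-up component values.
A = [(0.5, 0.5, 0.5)]
S = [(1.0, 0.5, 0.25)]
R = [(0.25, 0.25, 0.25)]
print(recombine(A, S, R))                     # [(0.75, 0.5, 0.375)]  original image
print(recombine(A, S, R, specular_gain=0.0))  # [(0.5, 0.25, 0.125)]  highlights removed
```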

Video

- Integration of genAI video tools into existing video FX software (e.g. Nuke) is proceeding. This example shows an actor’s facial expression being directed using a genAI node in Nuke (ComfyUI-for-Nuke).

- This week’s batch of decent AI video generations:

- A sort of weird, dreamlike video made with Sora.

Science

- Prithvi WxC: Foundation Model for Weather and Climate (code).

- A Preliminary Study of o1 in Medicine: Are We Closer to an AI Doctor?

Hardware

- Plaud NotePin ($170) is an AI wearable for note-taking (similar to Compass and Limitless).

- Renewed rumors of Jony Ive working with Sam Altman to create some kind of AI-powered physical device.

- Meta announced Orion augmented reality glasses. These are internal prototypes for now, but they show the direction this technology is moving.

- TSMC’s Arizona fab is now making production chips (for Apple) using 5 nm process technology, ahead of schedule.

Robots

- Pudu Robotics is developing a semi-humanoid: D7.

- Tencent apparently has a wheeled-legged semi-humanoid (reveal video, other videos).