General

- Blog post: The Intelligence Curse: With AGI, powerful actors will lose their incentives to invest in people.

- Microsoft blog post: The Golden Opportunity for American AI.

- Microsoft to Spend $80 Billion on AI Data Centers This Year. Over half this spending will be in the US.

- Emirati billionaire Hussain Sajwani is reportedly planning to invest $20 billion in US data centers.

- Anthropic is raising a further $2B, at a $60B valuation.

- Bloomberg interview: Sam Altman on ChatGPT’s First Two Years, Elon Musk and AI Under Trump; and Altman posts on his blog: Reflections. Altman reaffirms that agents will be developed in 2025, and that OpenAI is on track to AGI in the years following.

Research Insights

- PRIME: Process Reinforcement Through Implicit Rewards (data/models, code)

- Builds on prior work: Free Process Rewards without Process Labels.

- The basic idea is: chain-of-thought (CoT) is a useful way to improve reasoning. But how to train better CoT? You can give scores to good vs. bad chains, but then the model only gets whole-chain feedback. It would be better to know where the reasoning chain went wrong (or right). In PRIME, alongside training the LLM, they train a second LLM that acts as a per-token reward model. It learns which CoT steps look good vs. bad, and so can provide finer-grained feedback during training.

- Differential Transformer. Explanation: The traditional transformer architecture spreads attention and can thus get distracted by noise (especially with large context). The differential architecture alters the attention equation so as to better amplify relevant context and suppress noise. This should improve retrieval and reduce hallucinations, especially for large contexts.

- Metadata Conditioning Accelerates Language Model Pre-training. Prepending metadata (e.g. “from wikipedia.org”) to the training data, for part of the training, allows more control. Training can be more data-efficient, and inference can be more steerable (by invoking a metadata field associated with the desired output style).
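
To make the Differential Transformer item above more concrete, here is a minimal sketch of the core idea: compute two softmax attention maps and subtract one from the other (scaled by a factor λ) before applying the result to the values. It is a toy, single-head, pure-Python illustration under simplifying assumptions: the function names and the toy inputs are made up for this example, λ is passed in as a fixed number (in the paper it is learned), and the normalization and multi-head details of the actual architecture are omitted.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_map(queries, keys):
    """Standard scaled dot-product attention weights (one row per query)."""
    d = len(keys[0])
    return [
        softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys])
        for q in queries
    ]


def differential_attention(q1, k1, q2, k2, values, lam):
    """Subtract a second attention map (scaled by lam) from the first.

    Hypothetical helper for illustration only; lam is a fixed scalar here.
    Returns the differential weights and the resulting outputs.
    """
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    weights = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    dim = len(values[0])
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(dim)]
        for row in weights
    ]
    return weights, outputs


# Toy inputs (made up): 2 queries, 3 keys/values, dimension 2.
q1 = [[1.0, 0.0], [0.0, 1.0]]
k1 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
q2 = [[0.2, 0.1], [0.1, 0.2]]
k2 = [[0.3, 0.3], [0.3, 0.3], [0.3, 0.3]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

weights, outputs = differential_attention(q1, k1, q2, k2, values, lam=0.5)
print(weights)
print(outputs)
```

In this toy setup the second map is uniform, so subtracting it removes a constant "background" amount of attention from every position; what remains is the part of the first map that stands out from that background. With `lam=0` the function reduces to ordinary softmax attention.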

LLM

- Interesting idea to automate the ranking of LLMs (for a particular task). LLMRank (“SlopRank”) uses a set of LLMs to generate outputs, and evaluate each other. The top model can then be inferred from a large number of recommendations (from the other models), analogous to ranking pages in web-search using PageRank.

- Rubiks AI releases new Sonus-1 models, including a reasoning variant.

- CodeElo: Benchmarking Competition-level Code Generation of LLMs with Human-comparable Elo Ratings (preprint, leaderboard).

- Blog post: Can LLMs write better code if you keep asking them to “write better code”? The answer is “yes”, though the expected issues arise (prompting matters, hallucinations may occur, etc.). It does generally confirm the notion that iterative LLM work can exceed single-shot generation.

- Virgo: A Preliminary Exploration on Reproducing o1-like MLLM.

- The FACTS Grounding Leaderboard: Benchmarking LLMs’ Ability to Ground Responses to Long-Form Input.

- Microsoft open-sources (MIT license) their small-but-performant (14B) phi-4 model.
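
The PageRank analogy in the LLMRank (“SlopRank”) item above can be sketched in a few lines. This is not the project's actual code: the `pagerank` function, the endorsement matrix, and the damping value are all assumptions chosen for illustration. Entry `endorsements[i][j]` is taken to mean "how strongly judge model i endorsed the outputs of model j".

```python
def pagerank(endorsements, damping=0.85, iterations=100):
    """Power-iteration PageRank over a judge-by-model endorsement matrix.

    Hypothetical helper for illustration. Each judge's row is normalized so
    that every judge hands out exactly one unit of "vote" in total.
    """
    n = len(endorsements)
    transition = []
    for row in endorsements:
        total = sum(row)
        if total == 0:
            # A judge that endorses nobody spreads its vote uniformly.
            transition.append([1.0 / n] * n)
        else:
            transition.append([w / total for w in row])

    scores = [1.0 / n] * n
    for _ in range(iterations):
        scores = [
            (1 - damping) / n
            + damping * sum(scores[i] * transition[i][j] for i in range(n))
            for j in range(n)
        ]
    return scores


# Made-up endorsement counts for three models (A, B, C).
names = ["A", "B", "C"]
endorsements = [
    [0, 1, 3],  # A's votes for A, B, C
    [1, 0, 3],  # B's votes
    [2, 1, 0],  # C's votes
]

scores = pagerank(endorsements)
ranking = sorted(zip(names, scores), key=lambda pair: pair[1], reverse=True)
print(ranking)
```

The useful property, as with web pages, is that an endorsement from a highly ranked model counts for more than one from a poorly ranked model, so the ranking is not just a raw vote count.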

AI Agents

- Google whitepaper: Agents.

- There are now lots of AI agent orchestration frameworks. Here’s the latest addition: orchestra (docs, code).

- Agent Laboratory: Using LLM Agents as Research Assistants.

- AgentRefine: Enhancing Agent Generalization through Refinement Tuning. Tuning a system only on successful task completion is not enough; one must also train the ability to handle errors.

Video

- Fine-tuning of video models to a particular style is now starting. Examples of Hunyuan Video LoRAs.

- Nvidia’s new GeForce RTX 5090 graphics card can use neural rendering for real-time ray-tracing (where only ~10% of pixels are computed using traditional ray-tracing, and a neural model is used to interpolate from that).

World Synthesis

- Nvidia presents Cosmos, a set of foundation models trained on 20 million hours of video. It is intended to accelerate training (e.g. via synthetic data generation) of models for robotics, autonomous driving, industrial settings, etc.

Science

- An automatic end-to-end chemical synthesis development platform powered by large language models.

- METAGENE-1: Metagenomic Foundation Model for Pandemic Monitoring (code). A 7B foundation model trained on 1.5T DNA/RNA base pairs, obtained from wastewater.

- A foundation model of transcription across human cell types.

- Accurate predictions on small data with a tabular foundation model (code). A foundation model using in-context learning can infer missing tabular data more correctly than traditional methods.

Brain

- The Digital Twin Brain Consortium publishes: Simulation and assimilation of the digital human brain (preprint, code). They simulate 86B neurons and 48T synapses using 14k GPUs.

- Predicting Human Brain States with Transformer. The system can predict the next 5s of fMRI data from the previous 20s.

- Key-value memory in the brain. They provide some evidence that key-value style memory could be implemented biologically, and may even be the process of human memory retrieval. If this were true, it would imply that the limit on human memory is not storage, but retrieval (one forgets not because the memory/information is erased/over-written, but because one loses the key/pathway towards retrieving that specific memory).
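
The storage-versus-retrieval distinction in the key-value memory item above can be illustrated with a toy model. This is only a sketch in the style of attention-based retrieval, not the paper's model: the `retrieve` function, the `sharpness` parameter, and the stored vectors are all invented for the example.

```python
import math


def retrieve(query, keys, values, sharpness=10.0):
    """Soft key-value lookup: weight each stored value by how well its key
    matches the query (softmax over scaled dot products).

    Hypothetical helper for illustration only.
    """
    scores = [sharpness * sum(q * k for q, k in zip(query, key)) for key in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


# Three stored memories: each key addresses one value (all made up).
keys = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# A good cue matches the first key, so the first value comes back almost intact.
good_cue = [1.0, 0.0, 0.0]
print(retrieve(good_cue, keys, values))

# A degraded cue matches every key equally, so retrieval returns a blur of all
# memories, even though the stored values themselves are unchanged.
degraded_cue = [0.4, 0.4, 0.4]
print(retrieve(degraded_cue, keys, values))
```

In this picture, "forgetting" is the second case: nothing in `values` was erased, but the cue no longer singles out the right key.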

Hardware

- Nvidia described their GB200 NVL72 rack-sized supercomputer: 72 Blackwell GPUs, 1.4 exaFLOPS of compute, and 130 trillion transistors. For fun, Jensen Huang showed what the corresponding compute would look like if all placed on a single wafer as a superchip, though that is not how it is actually manufactured or used.

- Nvidia announces a $3,000 personal AI supercomputer called Digits, which uses a GB10 superchip. A single unit can run a 200B model; linking two should allow one to run 405B models.
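
As a rough sanity check on the Digits model-size figures above, one can estimate the memory needed just to hold a model's weights. The numbers below rest on two assumptions that are not stated in the item itself: that weights are stored at 4 bits per parameter, and that each unit has 128 GB of memory. The estimate also ignores activations, the KV cache, and other runtime overhead.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory needed to store the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumptions for illustration (not taken from the text above).
ASSUMED_BITS_PER_PARAM = 4
ASSUMED_MEMORY_PER_UNIT_GB = 128

for num_params, units in [(200e9, 1), (405e9, 2)]:
    needed = weight_memory_gb(num_params, ASSUMED_BITS_PER_PARAM)
    available = units * ASSUMED_MEMORY_PER_UNIT_GB
    print(
        f"{num_params / 1e9:.0f}B params: ~{needed:.1f} GB of weights, "
        f"{available} GB available across {units} unit(s), "
        f"fits: {needed <= available}"
    )
```

Under these assumptions, the 200B and 405B figures correspond to about 100 GB and about 200 GB of weights respectively, which is consistent with one unit and two linked units.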

Robots

- OpenDriveLab and AgiBot-World release a large-scale robotics dataset: 1M trajectories from 100 real-world scenarios and 100 robots.

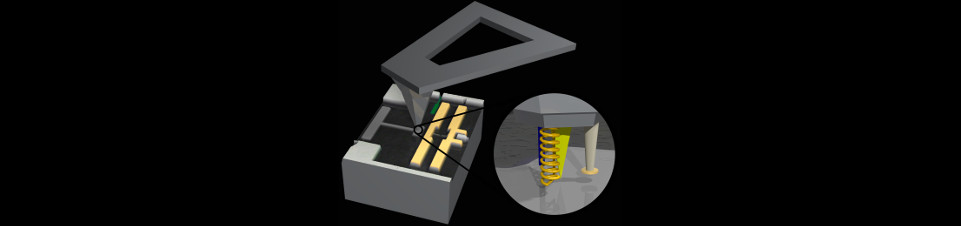

- Nvidia describes Isaac GR00T Blueprint to accelerate robotics development.