General

- Richard Sutton and David Silver publish an essay: Welcome to the Era of Experience. They argue that scaling AI on human data (pre-training) is hitting diminishing returns. The resurgence of reinforcement learning presages a shift towards experiential learning, where agents will need to act in the world to self-train.

- Anthropic publishes: Values in the wild: Discovering and analyzing values in real-world language model interactions (paper). In real conversations with users, Claude broadly adheres to the values intended by Anthropic. This approach can be used to help confirm alignment after deployment.

- About a month ago, METR released a paper analyzing scaling of AI capabilities: Measuring AI Ability to Complete Long Tasks. Several follow-up analyses agree that the extrapolated trend suggests rapid improvements (though there are large uncertainties).

- Dwarkesh Patel: Questions about the Future of AI.

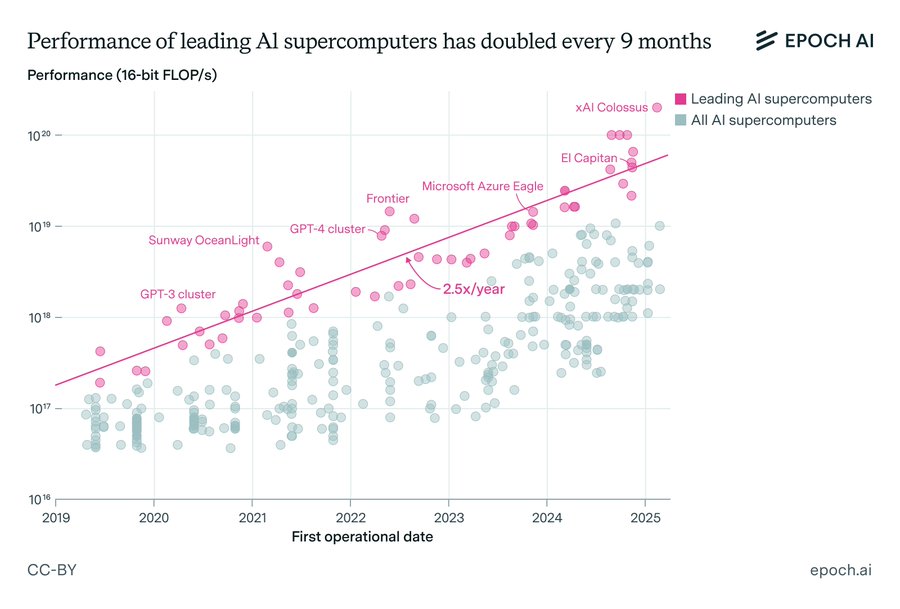

- Epoch AI releases a report on: Trends in AI Supercomputers.

Research Insights

- Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model? Results suggest that the base model already has reasoning capabilities. RL elicits these capabilities but does not create them per se. This is intuitive in the sense that base models can already be steered into chain-of-thought manually, through prompt engineering or re-prompting. RL thus elicits a more efficient version of this behavior.

- Sleep-time Compute: Beyond Inference Scaling at Test-time. One can pre-compute a set of plausible questions/answers offline. Because useful parts of the work are done before the user's query arrives, inference-time compute can be saved.

- Artificial intelligence and dichotomania. LLMs exhibit humanlike biases when interpreting statistical significance. This is further evidence that pretraining builds models that implicitly simulate human behavior.

- NEMOTRON-CROSSTHINK: Scaling Self-Learning beyond Math Reasoning. They improve generalized reasoning by incorporating multi-domain, multi-format RL.

- Think Deep, Think Fast: Investigating Efficiency of Verifier-free Inference-time-scaling Methods. They find that reasoning models are superior to inference-time scaling of non-reasoning models.
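
The sleep-time compute item above can be illustrated with a toy cache. This is only a minimal sketch of the idea as summarized here (answer anticipated questions offline, reuse them at query time), not the paper's implementation. The names `sleep_time_precompute`, `answer_at_test_time`, `normalize`, and `ToyModel` are hypothetical, and `ToyModel` merely stands in for an expensive LLM call.

```python
from typing import Callable, Dict, List

# Hypothetical type: (context, question) -> answer. Stands in for an expensive model call.
AnswerFn = Callable[[str, str], str]


def normalize(question: str) -> str:
    """Crude matching key: lowercase, drop question marks, collapse whitespace."""
    return " ".join(question.lower().replace("?", " ").split())


def sleep_time_precompute(
    context: str, anticipated_questions: List[str], expensive_answer: AnswerFn
) -> Dict[str, str]:
    """Offline ("sleep-time") phase: answer plausible questions before any user asks."""
    return {normalize(q): expensive_answer(context, q) for q in anticipated_questions}


def answer_at_test_time(
    context: str, question: str, cache: Dict[str, str], expensive_answer: AnswerFn
) -> str:
    """Online phase: reuse a precomputed answer if one matches, else pay full cost."""
    key = normalize(question)
    if key in cache:
        return cache[key]
    return expensive_answer(context, question)


class ToyModel:
    """Assumed stand-in for an LLM; counts calls so the saving is visible."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, context: str, question: str) -> str:
        self.calls += 1
        return f"answer to '{normalize(question)}' using {len(context)} chars of context"


if __name__ == "__main__":
    model = ToyModel()
    context = "Quarterly report text..."
    cache = sleep_time_precompute(
        context, ["What was the revenue?", "Who is the CEO?"], model
    )
    # Anticipated question: served from the cache, no new model call.
    print(answer_at_test_time(context, "what was the revenue", cache, model))
    # Unanticipated question: falls back to a fresh model call.
    print(answer_at_test_time(context, "What are the risks?", cache, model))
    print("model calls:", model.calls)
```

Exact-match lookup is the simplest possible choice; a real system would need some way to match user queries that only approximately resemble the anticipated ones.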

LLM

- Pleias-RAG is a family of small models (350M and 1.2B parameters) optimized for RAG (paper).

AI Agents

- Anthropic posts: Claude Code: Best practices for agentic coding.

Audio

- Nari Labs Dia is a text-to-speech (TTS) model that can generate remarkably realistic and emotional output (example).

Video

- Skywork releases SkyReels V2, an open-source video generator that can handle unlimited duration (examples).

- Sand AI introduces Magi-1, an open-source autoregressive diffusion video model that also handles infinite extension (examples).

Hardware