The importance of AI agents continues to grow, which makes it mildly concerning that there is no agreed-upon definition of “AI Agent.” Some people use it to refer to any LLM call (where “multi-agent” might then just mean chaining multiple LLM calls), whereas others reserve it for generally intelligent AI taking independent actions in the real world (fully agentic). The situation is further confused by the fact that the term “agent” has been used for decades to refer to a generic software process.

This thread tried to crowd-source a definition. The ones that resonate with me are those that emphasize memory and tool-use, reasoning, and long-running operation on general tasks. So, I offered:

AI Agent: A persistent AI system that autonomously and adaptively completes open-ended tasks through iterative planning, tool-use, and reasoning.

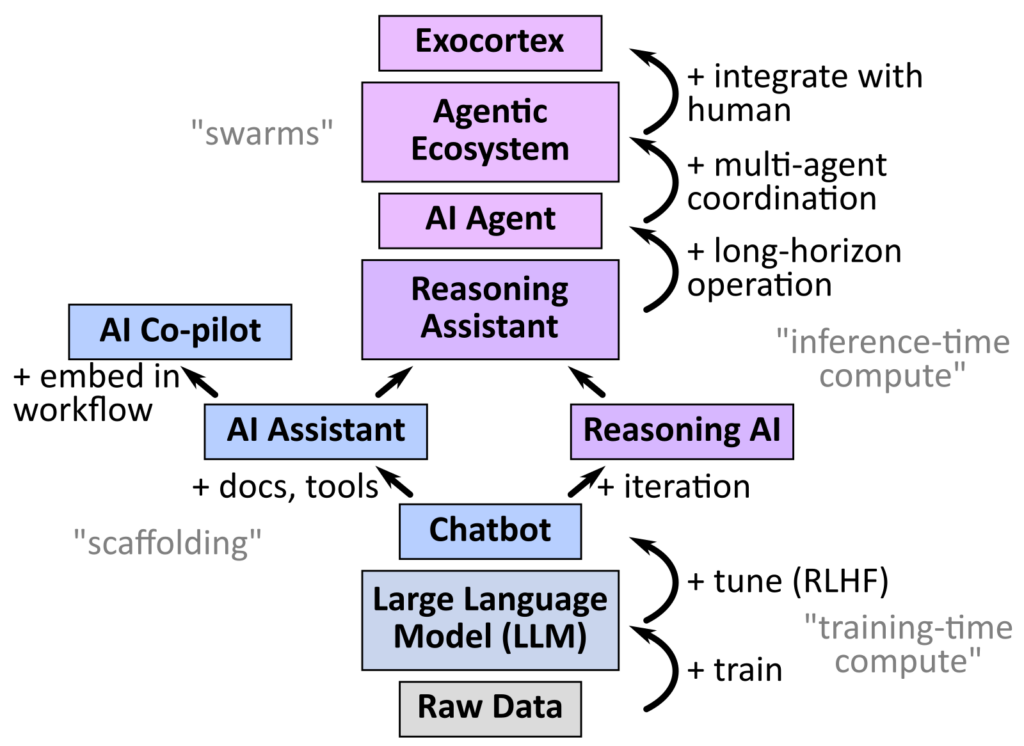

To further refine definitions:

Raw data is used to train a base model, which can be fine-tuned (e.g. into a chatbot). If we scaffold the LLM with tools (document retrieval, software APIs, etc.), we call it an AI Assistant (or a Co-pilot, if we embed it in an existing application or workflow).

We can also exploit iterative deliberation cycles (of many possible sorts) to give the LLM a primitive sort of “system 2” reasoning capability. We can call this a Reasoning AI (such systems are rare and currently primitive, but OpenAI o1 points in this direction). A Reasoning Assistant thus combines iteration with scaffolding.

An AI Agent, then, is a reasoning AI with tool-use that runs over a long horizon, so that it can iteratively work on complex problems.
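To make the distinctions concrete, here is a minimal Python sketch that maps a set of capability flags to the labels used above. The `Capabilities` class, its field names, and the `classify` function are my own illustrative assumptions, not an established taxonomy or API; combinations that the definitions above do not name simply fall through to the nearest simpler label.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Capabilities:
    """Hypothetical capability flags; the names are illustrative only."""
    tools: bool = False         # scaffolding: document retrieval, software APIs, etc.
    iteration: bool = False     # iterative deliberation ("system 2") cycles
    long_horizon: bool = False  # keeps working on a task over a long horizon
    embedded: bool = False      # lives inside an existing application or workflow


def classify(c: Capabilities) -> str:
    """Map capability flags to the labels used in this post."""
    if c.tools and c.iteration and c.long_horizon:
        return "AI Agent"
    if c.tools and c.iteration:
        return "Reasoning Assistant"
    if c.iteration:
        return "Reasoning AI"
    if c.tools:
        return "Co-pilot" if c.embedded else "AI Assistant"
    return "Base or fine-tuned model"


if __name__ == "__main__":
    print(classify(Capabilities(tools=True)))
    print(classify(Capabilities(tools=True, iteration=True, long_horizon=True)))
```

The ordering of the checks mirrors the build-up in the text: each label adds one ingredient (tools, iteration, long-horizon operation) to the one before it.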

Beyond that, we can also imagine multi-agent ecosystems, which work on even more complex tasks by collaborating, breaking complex problems into parts (for specialized agents to work on), and combining results. Finally (and most ambitiously), we can imagine that this “swarm” of AI agents is deeply integrated into human work, such that it feels more like an exocortex.
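The split-and-combine pattern for multi-agent systems can be sketched in a few lines of Python. Everything here is a toy under stated assumptions: the "agents" are plain functions standing in for real reasoning systems, and the names `research_agent`, `writing_agent`, `SPECIALISTS`, `decompose`, and `solve` are hypothetical. A real system would let a model decide how to break the task apart and how to merge the results.

```python
from typing import Callable, Dict, List, Tuple

# An "agent" here is just a function from a subtask to a result (an assumption
# made for illustration; real agents would plan, use tools, and iterate).
Agent = Callable[[str], str]


def research_agent(subtask: str) -> str:
    return f"notes on {subtask}"


def writing_agent(subtask: str) -> str:
    return f"draft of {subtask}"


# Hypothetical registry of specialized agents, keyed by role.
SPECIALISTS: Dict[str, Agent] = {
    "research": research_agent,
    "write": writing_agent,
}


def decompose(task: str) -> List[Tuple[str, str]]:
    """Break a task into (role, subtask) pairs. Hard-coded for this sketch."""
    return [("research", task), ("write", task)]


def solve(task: str) -> str:
    """Route each part to its specialist, then combine the results."""
    results = [SPECIALISTS[role](subtask) for role, subtask in decompose(task)]
    return "; ".join(results)


if __name__ == "__main__":
    print(solve("agent definitions"))
```

The point of the sketch is only the shape of the control flow: decompose, dispatch to specialists, combine.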