General

- Experts were asked to evaluate Deep Research products: They were stunned by OpenAI Deep Research and judged OpenAI’s offering superior to Google’s. Overall, the reports (generated in under 20 minutes) were judged to have saved hours of human effort.

- Ethan Mollick: A new generation of AIs: Claude 3.7 and Grok 3. Yes, AI suddenly got better… again.

- Amazon Alexa devices will be upgraded to use Anthropic Claude as the AI engine. The upgraded assistant, called Alexa+, will roll out over the coming weeks.

Research Insights

- Surprising result relevant to AI understanding and AI safety: Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs. When an LLM is fine-tuned to produce insecure code, it also incidentally picks up many other misaligned behaviors, including giving malicious advice on unrelated topics and expressing admiration for evil people (example outputs).

- They even find that fine-tuning to generate “evil numbers” (such as 666) leads to similar kinds of broad misalignment.

- The broad generalization it exhibits could have deep implications.

- It suggests that the model learns many implicit associations during training and RLHF, so that many “unrelated” concepts become entangled in a single preference vector. Thus, pushing on a subset of the entangled concepts also affects the others.

- In retrospect, this is perhaps to be expected: the training data contain many implicit/underlying correlations, which can be exploited to learn a simpler predictive model. For example, concepts of being morally good correlate strongly with writing secure/helpful code.

- This is similar to a previous result: Refusal in Language Models Is Mediated by a Single Direction.

- From an AI safety perspective, this is perhaps heartening, as it suggests a more general and robust learning of human values. It also suggests that misalignment might be easier to detect (since it will show up in many different ways) and that models might be easier to steer (since behaviors are entangled and need not be steered individually).

- Of course much of this is speculation for now. The result is tantalizing but will need to be replicated and studied.

- SWE-RL: Advancing LLM Reasoning via Reinforcement Learning on Open Software Evolution. Meta’s RL-trained model reaches 41.0% on SWE-Bench Verified despite being only a 70B model (vs. 31% for the non-RL 70B model), further validating the RL approach to improving performance in focused domains.

- Sparse Autoencoders for Scientifically Rigorous Interpretation of Vision Models. They find evidence for cross-modal knowledge transfer. For example, CLIP can learn richer aggregate semantics (e.g. for a particular culture or country) than a vision-only method.

- Inception Labs is reporting progress on diffusion language models (dLLMs): Mercury model (try it here). Unlike traditional autoregressive LLMs, which generate tokens one at a time (left to right), the diffusion method generates the whole token sequence at once. The approach mirrors image generation: start with an imperfect/noisy estimate of the entire output, and progressively refine it. In addition to a speed advantage, Karpathy notes that such models might exhibit different strengths and weaknesses from conventional LLMs.
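To make the contrast with left-to-right decoding concrete, here is a minimal Python sketch of one common diffusion-style decoding loop (masked diffusion): start with every position masked, predict all positions in parallel, commit the most confident guesses, and repeat. It is an illustration under stated assumptions, not Mercury's actual method, which is not described here. `toy_denoiser` is a hypothetical stand-in for a trained model: it always proposes a fixed sentence and assigns random confidences.

```python
import random
from typing import Callable, List, Optional, Tuple

Token = Optional[str]  # None marks a still-masked ("noisy") position
MASK: Token = None


def toy_denoiser(seq: List[Token], rng: random.Random) -> List[Tuple[str, float]]:
    """Hypothetical stand-in for a trained diffusion LM.

    Returns a (token, confidence) guess for EVERY position at once. A real model
    would condition on the tokens already committed in `seq`; this toy ignores
    them, always proposes a fixed sentence, and draws random confidences.
    """
    target = "the cat sat on the mat".split()
    return [(target[i % len(target)], rng.random()) for i in range(len(seq))]


def diffusion_decode(
    length: int,
    steps: int,
    denoiser: Callable[[List[Token], random.Random], List[Tuple[str, float]]],
    seed: int = 0,
) -> List[List[Token]]:
    """Masked-diffusion-style decoding: refine the whole sequence over `steps` passes."""
    rng = random.Random(seed)
    seq: List[Token] = [MASK] * length  # start from a fully "noisy" sequence
    history = [list(seq)]
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok is MASK]
        if not masked:
            break
        preds = denoiser(seq, rng)  # one parallel prediction for all positions
        # Unmask an even share of the remaining positions so that every position
        # is filled by the final step (ceiling division).
        k = -(-len(masked) // (steps - step))
        # Commit the k most confident predictions among still-masked positions.
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[:k]:
            seq[i] = preds[i][0]
        history.append(list(seq))
    return history


if __name__ == "__main__":
    for state in diffusion_decode(length=6, steps=3, denoiser=toy_denoiser):
        print(" ".join("_" if tok is MASK else tok for tok in state))
```

Each printed line is one refinement step: all six positions are filled in three parallel passes rather than six sequential ones. In this simplified variant, committed tokens are never revised; other diffusion schemes can re-noise and revise earlier choices.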

LLM

- Different LLMs are good for different things, so why not use a router to select the ideal LLM for a given task/prompt? Prompt-to-Leaderboard (code) demonstrates this, taking the top spot on the Chatbot Arena leaderboard.

- Anthropic release Claude 3.7 Sonnet (system card), a hybrid model that can return immediate answers or conduct extended thinking. In benchmarks, it is essentially state-of-the-art (comparing favorably against o1, o3-mini, R1, and Grok 3 Thinking). Surprisingly, even the non-thinking mode can outperform frontier reasoning models on certain tasks. It appears extremely good at coding.

- Claude Code is a terminal application that automates many coding and software engineering tasks (currently in limited research preview).

- Performance of the thinking variant on ARC-AGI is roughly equal to o3-mini (though at higher cost).

- Achieves 8.9% on Humanity’s Last Exam (cf. 14% by o3-mini-high).

- For fun, some Anthropic engineers deployed Claude to play Pokemon (currently live on Twitch). Claude 3.7 is making record-setting progress in this “benchmark”.

- Qwen releases a thinking model: QwQ-Max-Preview (use it here).

- Convergence have open-sourced Proxy Lite, a scaled-down version of their full agentic model.

- OpenAI have added Assistants File Search, essentially providing an easier way to build RAG solutions in their platform.

- Microsoft release phi-4-multimodal-instruct, a language+vision+speech multimodal model.

- DeepSeek releases:

- OpenAI releases GPT-4.5, a new, improved non-reasoning LLM that is apparently “a big model”. It offers improved response quality, fewer hallucinations, and more nuanced emotional understanding.

- 10% on ARC-AGI.

- The exact model size has not been revealed; one educated guess puts it at ~5.8 trillion parameters.
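The prompt-routing idea behind Prompt-to-Leaderboard (first item in this section) reduces to two steps: score every candidate model for the incoming prompt, then send the prompt to the winner. The Python sketch below shows only that routing step, assuming a scorer that returns a per-model score for a prompt. Prompt-to-Leaderboard learns such a prompt-specific leaderboard; here `toy_prompt_scores` is a hypothetical keyword heuristic with made-up model names, and the optional cost cap is an illustrative extra.

```python
from typing import Callable, Dict, Optional


def toy_prompt_scores(prompt: str) -> Dict[str, float]:
    """Hypothetical per-prompt scorer with made-up model names.

    A real router would use a trained predictor of a prompt-specific
    leaderboard; this keyword toy only mimics the interface (prompt -> scores).
    """
    p = prompt.lower()
    scores = {"model-a": 1.0, "model-b": 1.0, "model-c": 1.0}
    if "code" in p or "python" in p:
        scores["model-a"] += 1.0  # pretend model-a is the coding specialist
    if "poem" in p or "story" in p:
        scores["model-b"] += 1.0  # pretend model-b is best at creative writing
    if "prove" in p or "math" in p:
        scores["model-c"] += 1.0  # pretend model-c is best at math
    return scores


def route(
    prompt: str,
    scorer: Callable[[str], Dict[str, float]],
    costs: Optional[Dict[str, float]] = None,
    budget: Optional[float] = None,
) -> str:
    """Send the prompt to the top-ranked model on its predicted leaderboard.

    If both `costs` and `budget` are given, models costing more than `budget`
    are excluded before picking the best remaining one.
    """
    scores = scorer(prompt)
    candidates = [
        m for m in scores
        if costs is None or budget is None or costs[m] <= budget
    ]
    if not candidates:
        raise ValueError("no model fits the budget")
    # max() returns the first maximal item, so ties go to the scorer's earliest model.
    return max(candidates, key=lambda m: scores[m])


if __name__ == "__main__":
    print(route("Write Python code to sort a list", toy_prompt_scores))  # model-a
    print(route("Write a poem about autumn", toy_prompt_scores))  # model-b
```

Keeping the scorer separate from the router means a learned predictor can replace the toy heuristic without touching the routing logic; a cost cap like the one sketched trades some predicted quality for cheaper models.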

AI Agents

- Factory AI launches, aiming to provide a platform for building agentic AI workflows; focused on building enterprise software.

- Microsoft: Magma: A Foundation Model for Multimodal AI Agents. Magma-8B is a visual language model (VLM) for agents.

Audio

- Luma add a video-to-audio feature to their Dream Machine video generator.

- ElevenLabs introduce a new audio transcription (speech-to-text) model: Scribe. They claim it outperforms state-of-the-art models (e.g. OpenAI Whisper).

- Hume announce Octave, an improved text-to-speech model in which one can describe the voice (including accent) and provide acting directions (emotion, etc.).

Video

- Alibaba release Wan 2.1 as open-source (examples).

3D

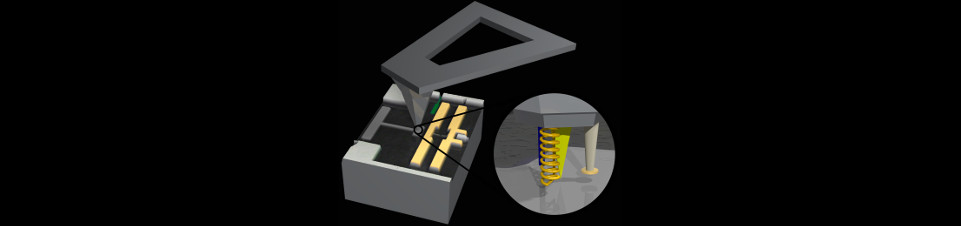

- TRELLIS: Structured 3D Latents for Scalable and Versatile 3D Generation.

- MASt3R-SLAM (preprint) enables fast (real-time) 3D reconstruction from monocular video. Example: recreating the Shawshank Redemption prison from a single video.

Science

- Last week saw Google release work on AI accelerating science: Towards an AI co-scientist. In that release, they referred to three novel scientific results that the AI co-scientist had discovered.

- AI cracks superbug problem in two days that took scientists years. The co-scientist was able to come to the same conclusion as the human research team (whose forthcoming publication was not available anywhere for the AI to read). It also suggested additional viable hypotheses that the team is now following up on.

- Large language models for scientific discovery in molecular property prediction.

Robots

- Morgan Stanley report on potential economic value of humanoid robots: The Humanoid 100: Mapping the Humanoid Robot Value Chain.

- 1X shows a video of NEO Gamma (video includes a mix of tele-operation and autonomy). The smoothness of motion suggests quite capable hardware.

- Figure claims that their new Helix model enabled them to adapt their humanoid robot to a new customer task in 30 days.