General

- OpenAI announces: ChatGPT Plus is now free for college students through May.

- Anthropic Education Report: How University Students Use Claude.

- Andrej Karpathy: Power to the people: How LLMs flip the script on technology diffusion.

- Mira Murati has raised $2B (at $10B valuation) for her Thinking Machines startup.

Research Insights

- New Anthropic results: Reasoning models don’t always say what they think (paper). They find that the plaintext chain-of-thought (CoT) of reasoning models may not reflect the reasoning the model actually used internally. This has implications for improving reasoning models, and also suggests (from a safety perspective) that we should not rely on monitoring CoT to infer what models are internally planning.

- DeepSeek publish: Inference-Time Scaling for Generalist Reward Modeling. They improve the reinforcement learning step by spending additional inference-time compute on the reward model, so that it produces better reward signals.

- Do Larger Language Models Imply Better Reasoning? A Pretraining Scaling Law for Reasoning. While scale helps, one can also encounter overparametrization (memorization instead of reasoning).

- DeepResearcher: Scaling Deep Research via Reinforcement Learning in Real-world Environments. They identify behavioral patterns:

  - Planning ability emerges naturally in RL, despite not performing SFT on planning data.

  - The model verifies answers (even correct answers).

  - When retrievals are insufficient, the model can generate refined search queries.

  - The model can recognize when it lacks sufficient information, and decline to answer.

- Rethinking Reflection in Pre-Training. They show that even just from pre-training, models develop some amount of reflective/reasoning understanding.

- Concise Reasoning via Reinforcement Learning. They find that RL generically favors longer responses, whereas correct responses are often the more concise ones. This suggests improving reasoning by favoring shorter answers.

- Google Research blog: Geospatial Reasoning: Unlocking insights with generative AI and multiple foundation models. They are building foundation models that integrate the wealth of geospatial data available, which then allows reasoning over this data. Adding more spatial understanding might also unlock other LLM abilities.
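To make the idea in the Concise Reasoning item above concrete, here is a minimal sketch of a length-aware reward, assuming a simple linear penalty. This is an illustration only and not the method from the paper; the function name, the `penalty` weight, and the linear form are all hypothetical choices.

```python
def length_penalized_reward(
    is_correct: bool,
    n_tokens: int,
    max_tokens: int,
    penalty: float = 0.5,  # hypothetical weight, not taken from the paper
) -> float:
    """Reward correct answers, with a discount that grows with response length.

    Incorrect answers get 0 regardless of length, so the model cannot
    gain reward just by being short.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not is_correct:
        return 0.0
    # Fraction of the token budget used, capped at 1.0.
    fraction_used = min(n_tokens, max_tokens) / max_tokens
    return 1.0 - penalty * fraction_used


# A short correct answer scores higher than a long correct one,
# and both score higher than an incorrect answer.
short = length_penalized_reward(True, n_tokens=100, max_tokens=1000)
long = length_penalized_reward(True, n_tokens=900, max_tokens=1000)
wrong = length_penalized_reward(False, n_tokens=100, max_tokens=1000)
print(short > long > wrong)
```

Keeping `penalty` below 1.0 means a correct answer always beats an incorrect one, so the length term only breaks ties among correct responses.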

LLM

- More progress in diffusion language models: Introducing Dream 7B, described as the most powerful open diffusion large language model to date.

- Meta releases Llama 4 series of MoE LLMs: Scout (109B, 17B active, 16 experts), Maverick (400B, 17B active, 128 experts), and Behemoth (2T, 288B active, 16 experts). Scout supports a context of up to 10M tokens. The models appear to be competitive (nearing the state-of-the-art tradeoff curve for performance/price), and thus extremely impressive for openly released models.

  - Independent evals (including follow-up) from Artificial Analysis show Llama 4 performing well against non-reasoning models.

  - Evaluations of the 10M context on simple needle-in-a-haystack (NIAH) tests seem reasonable, but (reportedly) it does not fare as well on deeper understanding of long context.

- Cloudflare launch an open beta for their AutoRAG solution.

- Nvidia release Llama-3_1-Nemotron-Ultra-253B-v1, which seems to beat Llama 4 despite being based on Llama 3.1.

- Amazon announces Nova Sonic speech-to-speech foundation models, for building conversational AI.

- Agentica release open-source: DeepCoder-14B-Preview, a reasoning model optimized for coding (code, hf).

- Anthropic announce a new “Max” plan for Claude ($100/month).

- xAI release an API for Grok-3. Pricing appears relatively expensive (e.g. compared to better-performing Gemini models).

- OpenAI adds an evals API, making it easier to programmatically define tests, evaluations, etc. This should make it faster/easier to test different prompts, LLMs, etc.

- Bytedance release technical report for Seed-Thinking-v1.5, a 200B reasoning model.

- OpenAI add a memory feature to ChatGPT, allowing it to reference all past chats in order to personalize responses.
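For readers unfamiliar with the NIAH (needle-in-a-haystack) evaluations mentioned in the Llama 4 item above, the following is a minimal sketch of how such a test can be structured. It is not the harness used in any of the cited evaluations: `ask_model` is a hypothetical callable standing in for a real LLM call, and the filler sentence, needle wording, and scoring rule are assumptions.

```python
import re
from typing import Callable


def build_haystack(filler: str, needle: str, n_sentences: int, depth: float) -> str:
    """Repeat `filler` n_sentences times and insert `needle` at a relative
    position `depth` (0.0 = start of the context, 1.0 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    sentences = [filler] * n_sentences
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def niah_score(
    ask_model: Callable[[str], str],  # hypothetical: prompt in, answer out
    secret: str,
    n_sentences: int,
    depths: list[float],
) -> float:
    """Fraction of needle positions at which the model's answer contains the secret."""
    needle = f"The secret code is {secret}."
    question = "What is the secret code mentioned in the text above?"
    hits = 0
    for depth in depths:
        context = build_haystack("The sky was grey that day.", needle, n_sentences, depth)
        answer = ask_model(f"{context}\n\n{question}")
        hits += secret in answer
    return hits / len(depths)


def perfect_reader(prompt: str) -> str:
    """Stand-in 'model' that finds the needle with a regex (for demonstration only)."""
    match = re.search(r"secret code is (\w+)", prompt)
    return match.group(1) if match else "unknown"


print(niah_score(perfect_reader, "7421", n_sentences=1000, depths=[0.0, 0.5, 1.0]))
```

A test like this only checks literal retrieval of one planted fact, which is why a model can pass it while still struggling with deeper understanding of long context.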

AI Agents

- Cognition AI releases Devin 2.0. Devin has been reframed as an IDE (not unlike Cursor), but they claim that one can use this UI to manage several autonomous software development agents working in parallel.

- Detailed review (264 page book): Advances and Challenges in Foundation Agents: From Brain-Inspired Intelligence to Evolutionary, Collaborative, and Safe Systems.

- Sakana publishes an update to their methods: The AI Scientist-v2: Workshop-Level Automated Scientific Discovery via Agentic Tree Search (code). This system was used to generate a paper that was accepted to a peer-reviewed workshop.

- Google’s Deep Research offering now uses Gemini 2.5 Pro, leading to improved responses.

- Google unveil Firebase Studio, an AI-assisted coding environment (not unlike Cursor, Bolt, v0) that operates in-browser.

- Google announce Agent2Agent (A2A) Protocol, to enhance AI interoperability. It is a complement to MCP.

- Google have open-sourced an Agent Development Kit (ADK), a framework for deploying agents.

- Google have added Agentspace, a hub for building AI agents.

Audio

- Canopy Labs launch Orpheus Multilingual, an open-source text-to-speech (TTS) model.

Image Synthesis

- Midjourney unveils their v7 model (currently available to users in alpha). It has strong aesthetics (as is typical for Midjourney), but prompt adherence and text generation lag behind other models (examples).

- HiDream-I1 is a new open-source image model from HiDream.ai (it is now the leading open model).

- ByteDance UNO: Less-to-More Generalization: Unlocking More Controllability by In-Context Generation (paper, code, model). Includes generation from input images (e.g. for consistent characters).

Video

- Krea introduces Video Re-Style.

- ByteDance introduces a robust performance transfer method: DreamActor-M1: Holistic, Expressive and Robust Human Image Animation with Hybrid Guidance (paper).

- Runway introduces a turbo version of their newest Gen-4 model.

- Paper: One-Minute Video Generation with Test-Time Training (preprint). They add test-time-training (TTT) layers to a pre-trained model, and fine-tune on cartoons. It can generate one-minute video outputs, including shots/cuts that maintain (a semblance of) story consistency. This suggests that longer-range video generation (beyond a single clip) can be addressed using inference-time compute.

World Synthesis

- Microsoft blog: WHAMM! Real-time world modelling of interactive environments. They show generated Quake II gameplay (real-time playable). This shows continued progress towards fully rendered reactive simulations.

Science

- Google provide benchmarks for science: Evaluating progress of LLMs on scientific problem-solving.

- A researcher at Brookhaven National Laboratory (Weiguo Yin) used o3-mini-high to find new solutions to a physical model: Exact Solution of the Frustrated Potts Model with Next-Nearest-Neighbor Interactions in One Dimension: An AI-Aided Discovery.

- Coordinated AI agents for advancing healthcare (pdf).

- Training state-of-the-art pathology foundation models with orders of magnitude less data.

- Google report on AI for medicine:

Brain

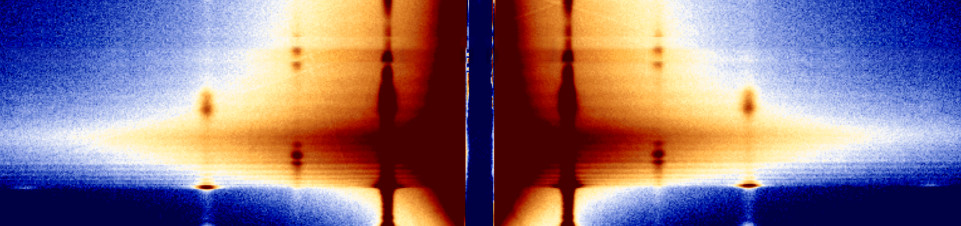

- Full mapping of a cubic millimeter of mouse brain: Biggest brain map ever details huge number of neurons and their activity (scientific paper: Functional connectomics reveals general wiring rule in mouse visual cortex).

Hardware

- Google unveils their next-generation Tensor Processing Unit (TPU): Ironwood: The first Google TPU for the age of inference. Each chip can deliver a peak of 4,614 TFLOPs.

- Reportedly, Ilya Sutskever’s startup, Safe Superintelligence (SSI), is using Google’s TPUs.

Robots

- Some short clips of 1X Neo performing domestic tasks autonomously.

- Westwood Robotics is working on Themis v2.

- Clone show an update to their Protoclone humanoid; >1,000 artificial muscles controlling >200 degrees-of-freedom.