General

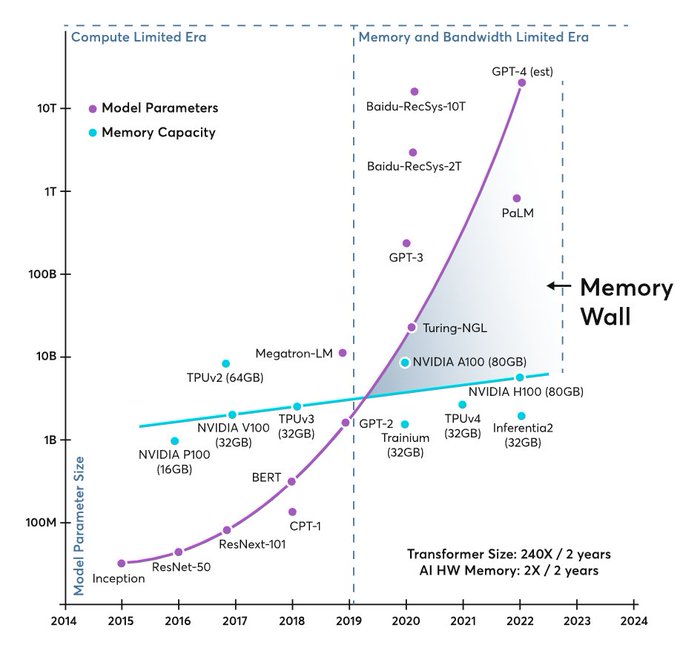

- AI and Memory Wall. Argues that the bottleneck for AI scaling has shifted from compute to memory, because memory bandwidth has grown much more slowly than peak compute.
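The compute-bound vs. memory-bound distinction is commonly framed with the roofline model. A kernel's attainable throughput is the smaller of two limits: peak compute, and arithmetic intensity (FLOPs per byte moved) times memory bandwidth. The sketch below is illustrative only. It assumes a hypothetical accelerator with 1000 TFLOP/s peak and 2 TB/s bandwidth; these numbers are not from the paper. It also uses a simple traffic model that moves each fp16 matrix element exactly once.

```python
# Roofline sketch: throughput is capped by peak compute or by memory bandwidth.
# PEAK_TFLOPS and BANDWIDTH_TB_S describe a HYPOTHETICAL accelerator.
PEAK_TFLOPS = 1000.0
BANDWIDTH_TB_S = 2.0


def gemm_intensity(m: int, n: int, k: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n], each element moved once."""
    flops = 2 * m * n * k  # one multiply + one add per inner-product term
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


def attainable_tflops(intensity: float, peak: float, bandwidth: float) -> float:
    # FLOP/byte * TB/s = TFLOP/s; the lower of the two ceilings wins.
    return min(peak, intensity * bandwidth)


def bound(intensity: float, peak: float, bandwidth: float) -> str:
    ridge = peak / bandwidth  # intensity where both ceilings meet
    return "compute-bound" if intensity >= ridge else "memory-bound"


for name, (m, n, k) in {
    "matrix-vector (decode-like)": (4096, 1, 4096),
    "square matmul": (4096, 4096, 4096),
}.items():
    ai = gemm_intensity(m, n, k)
    print(f"{name}: {ai:.1f} FLOP/byte, "
          f"{attainable_tflops(ai, PEAK_TFLOPS, BANDWIDTH_TB_S):.1f} TFLOP/s, "
          f"{bound(ai, PEAK_TFLOPS, BANDWIDTH_TB_S)}")
```

Under these assumptions, a matrix-vector product comes out near 1 FLOP/byte and reaches only a tiny fraction of peak. That is the shape of work in single-token LLM decoding. A large square matrix multiply, by contrast, clears the ridge point and is compute-bound.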

Research Insights

- ArcMemo: Abstract Reasoning Composition with Lifelong LLM Memory. Insights from reasoning traces are distilled into reusable, abstract natural-language concepts. These concepts are stored in memory and retrieved when solving later problems.

- MTQA: Matrix of Thought for Enhanced Reasoning in Complex Question Answering. Spreads inference-time compute across several parallel lines of thought instead of a single reasoning chain.

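- Small Language Models are the Future of Agentic AI. NVIDIA researchers argue that small language models are capable enough for many of the tasks agentic systems perform, and more economical.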

- Talk Isn’t Always Cheap: Understanding Failure Modes in Multi-Agent Debate. Shows that weaker agents can degrade a multi-agent debate, for example by persuading stronger agents to abandon correct answers.

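- Emergent Hierarchical Reasoning in LLMs through Reinforcement Learning. RL training gives rise to a two-level hierarchy, with high-level strategic planning on top of low-level procedural execution, even though no such hierarchy is explicitly designed in.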
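The ArcMemo idea of saving distilled lessons and retrieving them for new problems follows a general store-and-retrieve pattern, sketched below. This is not ArcMemo's method. `ConceptMemory` and its word-overlap ranking are hypothetical stand-ins, and the distillation step, which the paper performs with an LLM, is assumed to have already produced the lesson strings. The grid-puzzle lessons are invented for illustration.

```python
import re
from dataclasses import dataclass, field


def _tokens(text: str) -> set[str]:
    # Lowercased alphanumeric words, used for a crude relevance score.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class ConceptMemory:
    """HYPOTHETICAL store of natural-language lessons from past solutions."""
    entries: list[str] = field(default_factory=list)

    def write(self, lesson: str) -> None:
        # Save a reusable lesson, skipping exact duplicates.
        if lesson not in self.entries:
            self.entries.append(lesson)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        # Rank lessons by word overlap with the new problem (a stand-in for
        # whatever selection a real system uses); ties keep insertion order.
        q = _tokens(query)
        scored = [(len(q & _tokens(e)), i, e) for i, e in enumerate(self.entries)]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: (-s[0], s[1]))
        return [e for _, _, e in scored[:k]]


memory = ConceptMemory()
memory.write("When a grid puzzle repeats a shape, look for a symmetry transformation.")
memory.write("If colors change between input and output, build a color mapping table.")
memory.write("Count objects per row before guessing a rule about rows.")

# Lessons retrieved here would be prepended to the prompt for the new problem.
context = memory.retrieve("Output grid colors differ from the input grid", k=1)
print(context)
```

In a real system, retrieval would more likely use embeddings or an LLM judging relevance. Word overlap keeps the sketch dependency-free.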

LLM

Image Synthesis

- ByteDance Seedream 4.0 (examples).

Video

World Synthesis