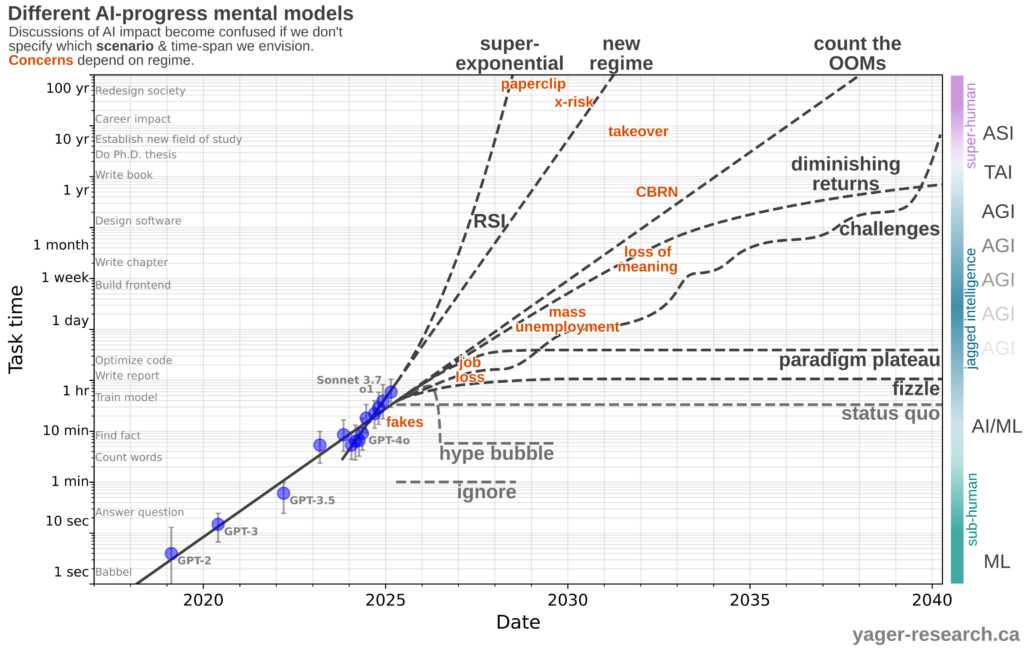

Debates about future AI progress and impact are often confused, because people hold very different mental models of the expected pace of progress and are projecting over different time horizons.

This figure is my attempt to clarify:

The experimental data points come from the METR analysis, Measuring AI Ability to Complete Long Tasks (paper, code/data). The “count the OOMs” (orders of magnitude) and “new regime” curves are extrapolated fits to the data. The other curves are ad hoc, drawn only to give a sense of how a particular mental model might translate into capability predictions.
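To make the idea of an extrapolated fit concrete, here is a minimal sketch of one way such a curve could be produced: a straight-line fit to the logarithm of the task time horizon versus date, which corresponds to a constant doubling time. This is not the code behind the figure or the METR analysis. The function names are hypothetical, and the sample numbers are synthetic placeholders chosen to double every 6 months, not METR measurements.

```python
import math

def fit_doubling_time(months, horizons_minutes):
    """Least-squares line through log2(horizon) vs. time.

    Returns (doubling_time_in_months, fitted_horizon_at_month_0).
    """
    n = len(months)
    ys = [math.log2(h) for h in horizons_minutes]
    mean_x = sum(months) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in months)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(months, ys))
    slope = sxy / sxx                    # doublings per month
    intercept = mean_y - slope * mean_x  # log2(horizon) at month 0
    return 1.0 / slope, 2.0 ** intercept

def months_until(target_minutes, current_minutes, doubling_months):
    """Months needed to grow from the current to the target horizon,
    assuming the fitted exponential trend simply continues."""
    return doubling_months * math.log2(target_minutes / current_minutes)

# Synthetic placeholder data (NOT real measurements):
# a horizon that starts at 1 minute and doubles every 6 months.
months = [0, 6, 12, 18, 24]
horizons = [1, 2, 4, 8, 16]

doubling, h0 = fit_doubling_time(months, horizons)
remaining = months_until(64, 16, doubling)
```

Under this kind of model, every additional order of magnitude in task length costs the same fixed amount of calendar time (about 3.3 doublings). Naive exponential extrapolations reach long-horizon tasks quickly for that reason, and different assumed doubling times lead to very different timelines.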

The figure tries to emphasize:

- Task complexity spans many orders of magnitude. The timescale over which “coherent progress” must be made is an imperfect but useful proxy for generally useful capabilities.

- There are many models for progress, and their predictions vary dramatically.

- Nevertheless, except for scenarios that fundamentally doubt AI progress is possible, the main disagreement among models is over the timescale required to reach a given kind of impact.

- The concerns one has (economic, social, existential) will depend on one’s model. (Of course one’s concerns will also be influenced by other assessments, such as the wisdom we expect leaders to exhibit at different stages of rollout.)

- It is difficult to define intelligence. Yet it seems quite defensible to say that we have transitioned from clearly sub-human AI into a “jagged intelligence” regime, where a particular AI system will outperform humans in some tasks (e.g. rapid knowledge retrieval) but underperform in others (e.g. visual reasoning). As we move through the jagged frontier, we should expect more and more human capabilities to be replicated in AI, even while some other subset remains unconquered.

- The definition of “AGI” is also unclear. Rather than a clear line being crossed, we should expect a growing fraction of people to acknowledge AI as generally capable as systems pass through the jagged frontier.

The primary goal of the figure is to clarify discussions: we should specify which kinds of scenarios we find plausible, which impacts we therefore consider possible, and which time span we are discussing.

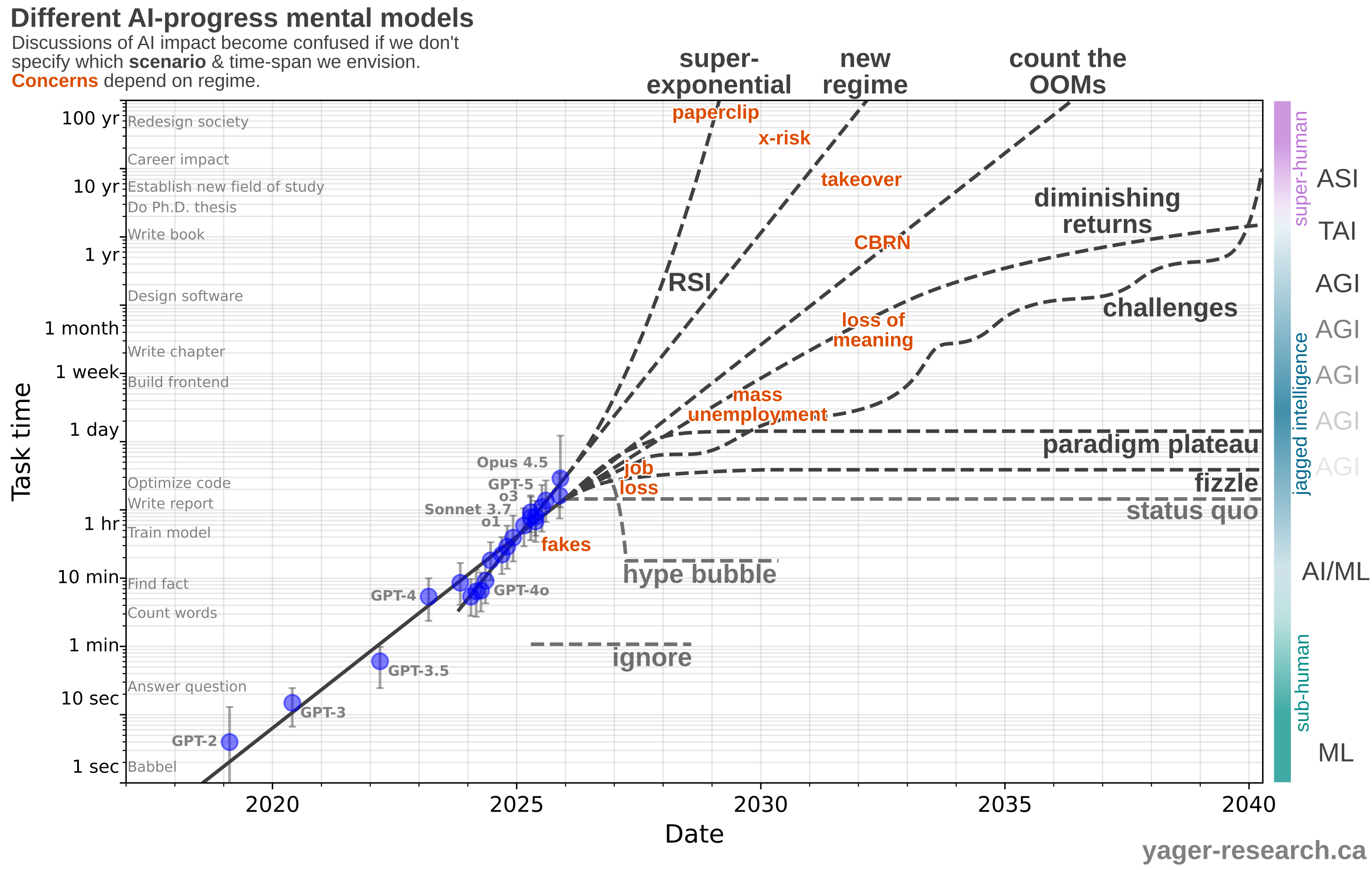

Updated graph, with 4 more months of progress:

Exponential improvements continue to stack, and the most recent pace is consistent with the faster scaling coming from reasoning models.

Updated graph, end of 2025:

Gains continue to stack. The future scenarios have narrowed around the naive exponential extrapolation. However, the METR metric is now being pushed to its limit and will need to be replaced or improved.