General

- Trends – Artificial Intelligence. A 340-page report detailing trends in usage, compute, etc. (summary of main points).

Research Insights

- From Tokens to Thoughts: How LLMs and Humans Trade Compression for Meaning. They investigate how LLMs represent concepts. They find that:

  - LLMs do map concepts into categories, similar to humans.

  - But LLMs do not capture “typicality” in the way humans do, so category membership is not identical.

  - A difference between LLMs and humans: the former optimize for compression, while the latter optimize for representational flexibility.

- How much do language models memorize? This builds on previous work estimating deep learning capacity at ~2 bits/parameter. They estimate 3.6 bits/parameter for GPT-style models.

- The Surprising Effectiveness of Negative Reinforcement in LLM Reasoning (code). Interesting result showing that one can improve model performance by training against negative signals (failures). In fact, this has certain advantages; e.g. purely rewarding successes means that alternative success pathways are not reinforced, whereas penalizing failures boosts all success pathways.

- General agents need world models. They provide formal evidence that any agentic system that achieves generalized capability must be building and exploiting some kind of world model.

- Predicting Empirical AI Research Outcomes with Language Models. They show that LLMs can exceed human performance in predicting which AI/ML research projects will be fruitful.
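To put the memorization estimate above in concrete terms, here is a back-of-the-envelope sketch that converts the quoted 3.6 bits/parameter into total capacity. The model sizes in the loop are hypothetical round numbers chosen for illustration, not figures from the paper.

```python
# Rough capacity arithmetic: total bits = parameters * bits per parameter.
BITS_PER_PARAM = 3.6  # estimate quoted above for GPT-style models


def capacity_bytes(num_params: int, bits_per_param: float = BITS_PER_PARAM) -> float:
    """Approximate memorization capacity in bytes for a model of the given size."""
    return num_params * bits_per_param / 8


# Hypothetical model sizes, for illustration only.
for n in (125_000_000, 1_000_000_000, 7_000_000_000):
    print(f"{n:>13,} params ~ {capacity_bytes(n) / 1e9:.2f} GB")
```

Under this estimate, a 1B-parameter model would hold on the order of 0.45 GB of memorized information, which is small compared to typical training corpora.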

LLM

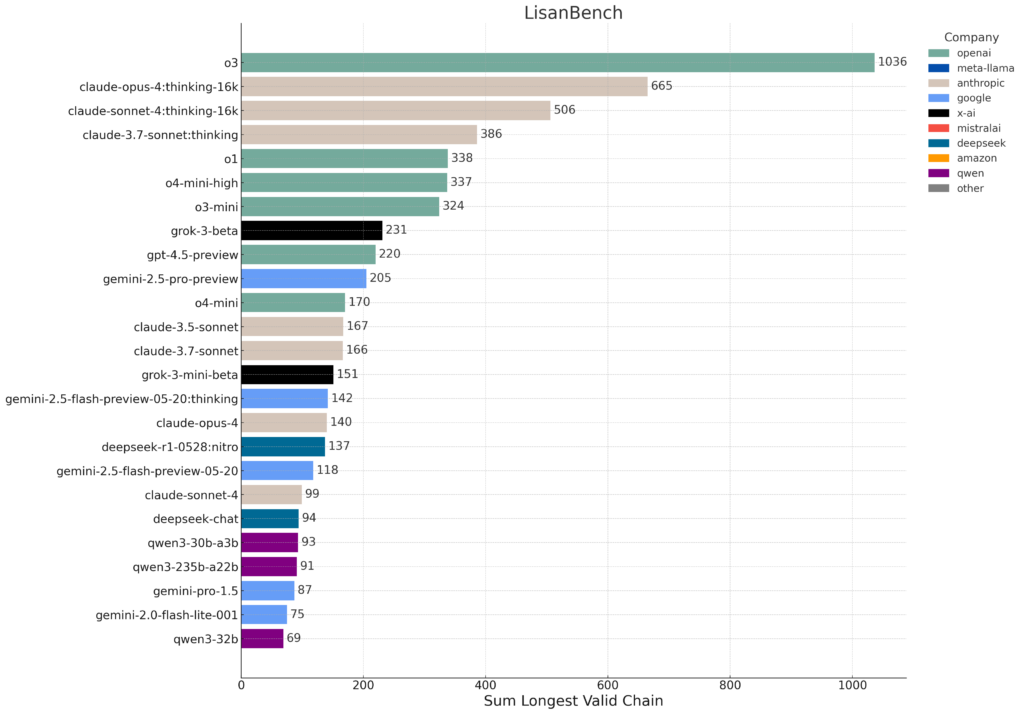

- LisanBench (github) is a new benchmark that evaluates long-term task coherence (“stamina”) through a game where the LLM must progressively alter a word (one character at a time), always yielding a valid English word, to build the longest possible chain. Although highly contrived, this does seem to test longer-range planning. The results conform to vibes about model intelligence.

- Anthropic has launched Claude Explains, a blog of AI-generated posts (with human verification). The focus (currently) appears to be teaching simple coding concepts.

- OpenAI announces updates to ChatGPT for business.

  - Deep research can now search across defined private data repositories (SharePoint, Google Drive, Dropbox, etc.).

  - Chat queries and data analysis requests can draw directly from connected data sources.

  - ChatGPT now supports custom connectors, based on MCP.

  - Being deployed for Teams, Enterprise, and Edu.

  - Record mode transcribes meetings, providing a summary document with pointers to the transcript/timecode.

- Google updated Gemini 2.5 Pro.
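The word-chain game behind LisanBench can be sketched as a small scoring function. This is only an illustration under stated assumptions, not the benchmark's actual code: it assumes each step substitutes exactly one letter (same word length), that words may not repeat, and it uses a tiny hypothetical word list (`TOY_WORDS`) in place of a real English dictionary.

```python
def differs_by_one(a: str, b: str) -> bool:
    """True if a and b have equal length and differ in exactly one position."""
    return len(a) == len(b) and sum(x != y for x, y in zip(a, b)) == 1


def valid_chain_length(chain: list[str], dictionary: set[str]) -> int:
    """Count the words in the chain before the first rule violation.

    Assumed rules (not taken from the benchmark itself): every word must be
    in the dictionary, must not repeat, and must differ from the previous
    word by exactly one substituted letter.
    """
    seen: set[str] = set()
    previous = None
    for count, word in enumerate(chain):
        if word not in dictionary or word in seen:
            return count
        if previous is not None and not differs_by_one(previous, word):
            return count
        seen.add(word)
        previous = word
    return len(chain)


# Hypothetical toy dictionary; a real evaluation would use a full word list.
TOY_WORDS = {"cold", "cord", "card", "ward", "warm", "word", "worm"}

print(valid_chain_length(["cold", "cord", "card", "ward", "warm"], TOY_WORDS))
```

Scoring by the length of the valid prefix rewards a model for staying coherent over many steps: a single invalid word, repeat, or multi-letter jump ends the chain.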

Agents

- Sakana AI, Jeff Clune, et al. report on: The Darwin Gödel Machine: AI that improves itself by rewriting its own code (github). This builds on the earlier ADAS work (Automated Design of Agentic Systems) that searches for good agent designs, but ups the ante by also recursively improving the search system.

Safety

Audio

- ElevenLabs introduces a multi-modal assistant that can handle a mixture of voice and text input (at the same time; not requiring toggling between modes). It does seem like a productive way to interact with an AI.

- Play AI is open-sourcing PlayDiffusion (demo), a diffusion-LLM for speech, which allows for inpainting (example).

- Bland announces an improvement in their text-to-speech model, with cloning of voice, accent, style, etc. They claim it is finally past the uncanny valley.

Image Synthesis

- Fal AI introduces FLUX Kontext, which allows image editing.

Video

- AMC is integrating Runway ML genAI into its workflows (mostly for ideation, pre-vis, and promotional materials).

- Luma introduces Modify Video, allowing style transfer or video generation conditioned on an input video.

Science

- FutureHouse releases ether0, a 24B reasoning model for molecular design.