General

- Perplexity adds a Deep Research capability (similar to those from Google and OpenAI). You can try it even in the free tier (5 queries per day). It scores 21% on the challenging “Humanity’s Last Exam” benchmark, second only to OpenAI at 26%.

- TechCrunch reports: A job ad for Y Combinator startup Firecrawl seeks to hire an AI agent for $15K a year. Undoubtedly a publicity stunt. And yet, it hints towards a near-future economic dynamic: offering pay based on desired results (instead of salary), and allowing others to bid using human or AI solutions.

- Mira Murati (formerly at OpenAI) announces Thinking Machines, an AI venture.

- Fiverr announces Fiverr Go, where freelancers can train a custom AI model on their own assets, and have this AI model/agent available for use through the Fiverr platform. This provides a way for freelancers to service more clients.

- ElevenLabs Payouts is a similar concept, where voice actors can be paid when clients use their customized AI voice.

- In the short term, this provides an extra revenue stream to these workers. Of course, these workers are also the most at risk of full replacement by these very AI methods. (And, indeed, one could worry that the companies in question are gathering the data they need to eventually obviate the need for profit-sharing with contributors.)

Research Insights

- The Geometry of Prompting: Unveiling Distinct Mechanisms of Task Adaptation in Language Models. By looking at the “geometry” of the internal/latent representations, they find that different prompts can evoke rather different representations, even in cases where they ultimately lead to the same reply. For instance, different evoked task-behaviors can interfere with one another. This points towards a better understanding of how to prompt models.

- FAIR/Meta report: Intuitive physics understanding emerges from self-supervised pretraining on natural videos.

- Are DeepSeek R1 And Other Reasoning Models More Faithful? The basic result is that reasoning models not only achieve higher scores on tests, but can also more correctly explain why their provided answer is correct.

- Emergent Response Planning in LLM. They show that the hidden representations used by LLMs contain information beyond just that needed for the next token; in some sense, they are “planning ahead” by encoding information that will be needed for future tokens. (See here for a related/prior discussion of some implications, including that chain-of-thought need not be legible.)

LLM

- Nous Research releases DeepHermes 3 (8B), which mixes together conventional LLM response with long-CoT reasoning response.

- InfiniteHiP: Extending Language Model Context Up to 3 Million Tokens on a Single GPU.

- ByteDance has released a new AI-first coding IDE: Trae AI (video intro).

- LangChain Open Canvas provides a user interface for LLMs, including memory features, UI for coding, display artifacts, etc.

- xAI announces the release of Grok 3 (currently available for use here), including a reasoning variant and “Deep Search” (equivalent to Deep Research). Early testing suggests a model closing in on the abilities of o1-pro (but not catching up to o3 full). So, while it has not demonstrated any record-setting capabilities, it confirms that frontier models are not yet using any methods that cannot be reproduced by others.

AI Agents

- Microsoft releases OmniParser v2 (code), which can interpret screenshots to allow LLM computer use (on Windows 11 VMs).

- Galileo AI introduces: Agent Leaderboard.

- An example of a multi-agent workflow in the wild: OpenAI Operator (browser use) and Replit Agent (software development) working together on a project.

- OpenAI releases a new paper and benchmark: SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering? (github) They curate a set of coding feature requests and their associated bounties, so that each task carries a concrete economic value. They find that existing models cannot complete (unaided) the majority of tasks in the test set. This benchmark should act as a viable way to test future agentic coding systems.
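
To make the idea of attaching economic value to coding tasks concrete, here is a minimal sketch of payout-weighted scoring: a model "earns" the bounty of every task it solves, and the total is compared with the money available. The task names, payouts, and the `Task`/`summarize` helpers are hypothetical illustrations, not taken from the SWE-Lancer code or data.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Task:
    name: str          # hypothetical task identifier
    payout_usd: float  # bounty attached to the task
    solved: bool       # whether the model's solution was accepted


def earnings(tasks: Iterable[Task]) -> float:
    """Total bounty earned: the sum of payouts over solved tasks only."""
    return sum(t.payout_usd for t in tasks if t.solved)


def summarize(tasks: Iterable[Task]) -> Dict[str, float]:
    """Report both the plain pass rate and the payout-weighted earn rate."""
    tasks = list(tasks)
    available = sum(t.payout_usd for t in tasks)
    earned = earnings(tasks)
    solved = sum(1 for t in tasks if t.solved)
    return {
        "earned_usd": earned,
        "available_usd": available,
        "pass_rate": solved / len(tasks),
        "earn_rate": earned / available,
    }


# Made-up example: the model solves the two cheap tasks but not the costly ones.
EXAMPLE_TASKS = [
    Task("fix-login-bug", 250.0, True),
    Task("add-export-feature", 1000.0, False),
    Task("refactor-payments", 4000.0, False),
    Task("typo-in-settings", 50.0, True),
]

if __name__ == "__main__":
    print(summarize(EXAMPLE_TASKS))
```

In this made-up example the pass rate is 50%, but the earn rate is far lower because the unsolved tasks carry most of the money. That gap is the reason a dollar-weighted metric can be more informative than a simple count of solved tasks.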

Safety

- New paper argues: Fully Autonomous AI Agents Should Not be Developed.

- DeepMind releases a short course on AGI safety.

Image

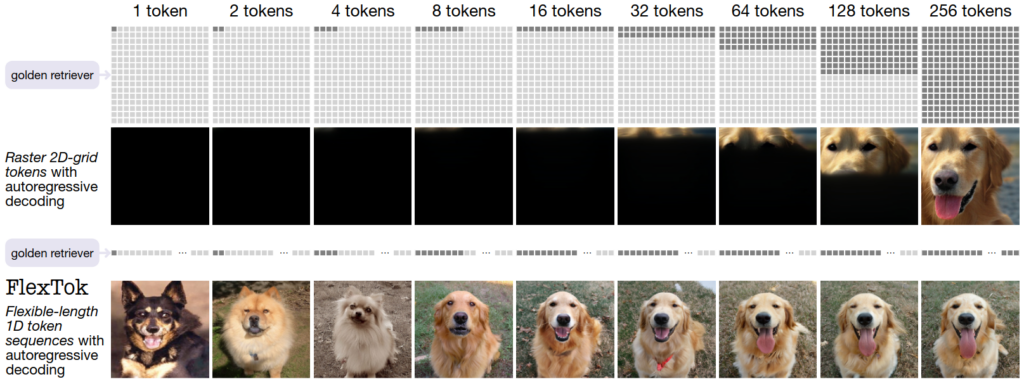

- FlexTok: Resampling Images into 1D Token Sequences of Flexible Length. They demonstrate a variable-length serial token encoding for images. An input image can be expressed in a highly lossy way using a few tokens, or a higher-fidelity manner using more tokens.

- Related prior work (2022): using Stable Diffusion as an image compression scheme.

- Related prior work (2022): Nvidia demonstrate AI video compression.

Video

- Argil AI enables creation of AI avatar videos, with high quality and control (examples).

- Step-Video-T2V is a new open-source video generator that is close to state-of-the-art (demo, examples).

- Nvidia announce: Magic 1-For-1: Generating One Minute Video Clips within One Minute (code).

- Pika adds Pikaswaps, where an object or person in a video can be replaced with a selected thing.

3D

- Meshy AI enables 3D model generation (from text or images). This video uses generated assets.

World Synthesis

- Microsoft report: Introducing Muse: Our first generative AI model designed for gameplay ideation (publication in Nature: World and Human Action Models towards gameplay ideation). They train a model on gameplay videos (World and Human Action Model, WHAM); the model can subsequently forward-simulate gameplay from a provided frame. The model has thus learned an implicit world model for the video game. Forward-predicting gameplay from artificially edited frames (introducing a new character or situation) allows gameplay ideas to be explored rapidly, before actually updating the video game. More generally, this points towards direct neural rendering of games and other interactive experiences.
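
The forward-simulation idea can be sketched as an autoregressive rollout: start from a provided (possibly edited) frame and repeatedly feed the model's own prediction back in as the next input. The sketch below is a toy under stated assumptions: `toy_world_model` is a hand-written stand-in for a learned model, a "frame" is reduced to a character position, and the actions are supplied by the caller. None of these names come from Muse/WHAM.

```python
from typing import Callable, List, Sequence, Tuple

Frame = Tuple[int, int]  # toy "frame": the (x, y) position of one character
Action = str             # toy controller input

# Hypothetical action set for the toy model.
MOVES = {"left": (-1, 0), "right": (1, 0), "up": (0, 1), "down": (0, -1)}


def toy_world_model(frame: Frame, action: Action) -> Frame:
    """Stand-in for a learned model: predict the next frame from frame + action."""
    x, y = frame
    dx, dy = MOVES.get(action, (0, 0))  # unknown actions leave the frame unchanged
    return (x + dx, y + dy)


def rollout(
    model: Callable[[Frame, Action], Frame],
    start_frame: Frame,
    actions: Sequence[Action],
) -> List[Frame]:
    """Autoregressive rollout: each predicted frame becomes the next input."""
    frames = [start_frame]
    for action in actions:
        frames.append(model(frames[-1], action))
    return frames


if __name__ == "__main__":
    # Simulate from the original frame, then from an "edited" starting frame.
    print(rollout(toy_world_model, (0, 0), ["right", "right", "up"]))
    print(rollout(toy_world_model, (5, 5), ["right", "right", "up"]))
```

Editing the starting frame and re-running the same rollout is the toy analogue of trying out a new character or situation without changing the game itself.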

Science

- Image-based generation for molecule design with SketchMol.

- Artificial intelligence for individualized treatment of persistent atrial fibrillation: a randomized controlled trial.

- Google blog post: Accelerating scientific breakthroughs with an AI co-scientist (paper: Towards an AI co-scientist). A multi-agent system to help generate hypotheses and research proposals. They show that the system generates high-value hypotheses, and provide three examples of concrete research outcomes (to be detailed in follow-up papers).

- Genome modeling and design across all domains of life with Evo 2.

- Microsoft report: Exploring the structural changes driving protein function with BioEmu-1.

Brain

- Meta research: Using AI to decode language from the brain and advance our understanding of human communication.

Robots

- Unitree video shows robot motion that is fairly fluid and resilient.

- Clone Robotics is moving towards combining their biomimetic components into a full-scale humanoid: Protoclone.

- MagicLab Robot with dextrous MagicHand S01.

- Figure AI claims a breakthrough in robotic control software (Helix: A Vision-Language-Action Model for Generalist Humanoid Control). The video shows two humanoid robots handling a novel task based on natural-language voice instructions from a human. Assuming the video is genuine, it shows real progress in the capability of autonomous robots to understand instructions and conduct simple tasks (including working with a partner in a team).