General

- This week saw a remarkable number of advances and releases, which is fitting since it is the two-year anniversary of “the biggest week in AI history” (March 2023: GPT-4 release, AI copilot for Microsoft 365 announced, Bing Image Creator preview, GitHub Copilot X announced, Midjourney v5 released, Bard early access, Google Docs generative tools announced, Anthropic releases Claude chatbot, high-efficiency Alpaca model released open source, Runway ML teases Gen2 text-to-video product, “Sparks of AGI” paper, etc.).

- (This level of activity is now considered a normal week.)

- Perplexity AI is in talks to double its valuation to $18 billion and raise up to $1 billion in new funding.

- New study: The Cybernetic Teammate: A Field Experiment on Generative AI Reshaping Teamwork and Expertise. Ethan Mollick blog post: The Cybernetic Teammate. Having an AI on your team can increase performance, provide expertise, and improve your experience.

- Epoch AI:

- Most AI value will come from broad automation, not from R&D.

- GATE: Modeling the Trajectory of AI and Automation (docs, paper). Their economic model projects large investments in AI, followed by very large productivity gains.

- OpenAI and MIT Media Lab: Early methods for studying affective use and emotional well-being on ChatGPT.

- Investigating Affective Use and Emotional Well-being on ChatGPT.

- How AI and Human Behaviors Shape Psychosocial Effects of Chatbot Use: A Longitudinal Controlled Study.

- These studies show a diversity of outcomes. Overall, whether the outcome is positive or negative depends on how the chatbot is used.

- ARC Prize officially launch the ARC-AGI-2 competition (2025). Machine Learning Street Talk partnered to produce a launch video.

Research Insights

- Compute Optimal Scaling of Skills: Knowledge vs Reasoning. They find different scaling behaviors for knowledge vs. reasoning improvements.

- Google report on analogies between artificial neural nets and human brains: Deciphering language processing in the human brain through LLM representations. They find that neural activity in human brains aligns cleanly with LLM embeddings.

- Interruption is All You Need: Improving Reasoning Model Refusal Rates through measuring Parallel Reasoning Diversity. They reduce hallucinations by inserting interruption tokens and sampling in parallel.

- Unlocking Efficient Long-to-Short LLM Reasoning with Model Merging (code). They show that model merging can more efficiently leverage the combination of system 1 and system 2 behaviors.

- I Have Covered All the Bases Here: Interpreting Reasoning Features in Large Language Models via Sparse Autoencoders. There are feature vector directions corresponding to reasoning; one can increase or decrease reasoning effort by adjusting this vector.

- Anthropic: Tracing the thoughts of a large language model. They investigate neural circuits in Claude.

- Paper: Circuit Tracing: Revealing Computational Graphs in Language Models. They replace the typical computational steps with more interpretable analogs, making it easier to track the circuits.

- Paper: On the Biology of a Large Language Model.
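
The feature-steering idea from the sparse-autoencoder item above (shifting activations along a "reasoning" direction to increase or decrease reasoning effort) can be illustrated with a toy sketch. This is not the paper's code: the vectors, the `steer` helper, and the strength `alpha` are all hypothetical, and a real experiment would apply the shift to a model's hidden activations rather than to a hand-written list.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    return [x / norm for x in v]


def project(h, direction):
    """Scalar component of h along the (normalized) direction."""
    unit = normalize(direction)
    return sum(a * b for a, b in zip(h, unit))


def steer(h, direction, alpha):
    """Shift activation h by alpha units along the feature direction.

    alpha > 0 amplifies the feature, alpha < 0 suppresses it.
    """
    unit = normalize(direction)
    return [a + alpha * u for a, u in zip(h, unit)]


# Hypothetical 3-dimensional activation and feature direction (illustration only).
hidden = [0.5, -1.0, 2.0]
reasoning_direction = [0.0, 3.0, 4.0]

more_reasoning = steer(hidden, reasoning_direction, alpha=2.0)
less_reasoning = steer(hidden, reasoning_direction, alpha=-2.0)

print(project(hidden, reasoning_direction))
print(project(more_reasoning, reasoning_direction))
print(project(less_reasoning, reasoning_direction))
```

Steering by `alpha` changes the component along the feature direction by exactly `alpha` and leaves the orthogonal components untouched, which is what makes this kind of intervention easy to reason about.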

LLM

- Anthropic adds web search to Claude.

- Modifying Large Language Model Post-Training for Diverse Creative Writing.

- DeepSeek release DeepSeek V3-0324 (685B); highest-scoring non-reasoning model.

- Google unveil Gemini 2.5 Pro, an LLM with state-of-the-art performance on many benchmarks (including #1 on lmarena, and record-setting 18% on Humanity’s Last Exam) and 1M context length (with good performance at large context).

Multimodal

- Nvidia introduce the Nemotron-H family of models (8B, 47B, 56B), including base/instruct/VLM variants. They are hybrid Mamba-Transformer models that achieve good efficiency.

- Cohere introduce Aya Vision, open-weights vision models (8B, 32B).

- Alibaba release Qwen2.5-VL-32B-Instruct (hf), with RL optimization of math and problem-solving abilities (including for vision).

- MoshiVis is an open-source vision/speech model (try here) that can converse naturally about images.

- OpenVLThinker: An Early Exploration to Complex Vision-Language Reasoning via Iterative Self-Improvement (code).

- Alibaba release Qwen2.5-Omni-7B (tech report, code, weights, try it). Multimodal: text, vision, audio, speech.

AI Agents

- OpenAI adds support for Anthropic’s Model Context Protocol (MCP), solidifying it as the standard mechanism for giving AI agents access to diverse resources in a uniform way.
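
To make "access to diverse resources in a uniform way" concrete: MCP messages are JSON-RPC 2.0, and a client discovers and invokes tools through the `tools/list` and `tools/call` methods. The sketch below only builds the request payloads; the tool name `search_docs` and its arguments are hypothetical, and a real session also needs a transport and an initialization handshake, which are omitted here.

```python
import json
from itertools import count

# Monotonically increasing request ids, as JSON-RPC expects a unique id per request.
_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request object (as a plain dict)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. "search_docs" and its arguments are made up for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_docs", "arguments": {"query": "quarterly report"}},
)

print(json.dumps(list_tools, indent=2))
print(json.dumps(call_tool, indent=2))
```

Because every tool is reached through the same two request shapes, an agent can work with any compliant server without tool-specific client code.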

Safety

- Superalignment with Dynamic Human Values. They treat alignment as a dynamic problem, where human values may change over time. The proposed solution involves an AI that breaks tasks into smaller components that are easier for humans to guide. This framework assumes that alignment of sub-tasks correctly generalizes to desirable outcomes for the overall task.

- Google DeepMind: Defeating Prompt Injections by Design.

Audio

- OpenAI announced new audio models: new text-to-speech models (test here) that can be instructed on how to speak; and gpt-4o-transcribe, with a lower error rate than Whisper (including a mini variant that is half the cost of Whisper).

- OpenAI update their advanced voice mode, making it better at not interrupting the user.

Image Synthesis

- Tokenize Image as a Set (code). Interesting approach to use an unordered bag of tokens (rather than a serialization, as done with text) to represent images.

- StarVector is a generative model for converting text or images to SVG code.

- Applying mechanistic interpretability to image synthesis models can offer enhanced control: Unboxing SDXL Turbo: How Sparse Autoencoders Unlock the Inner Workings of Text-to-Image Models (preprint, examples).

- The era of in-context and/or autoregressive image generation is upon us. In-context generation means the LLM can directly understand and edit photos (colorize, restyle, make changes, remove watermarks, etc.). Serial autoregressive approaches also handle text and prescribed layout much better, and often have improved prompt adherence.

- Last week, Google unveiled Gemini 2.0 Flash Experimental image generation (available in Google AI Studio).

- Reve Image reveal that the mysterious high-scoring “halfmoon” is their image model, apparently exploiting some kind of “logic” (auto-regressive model? inference-time compute?) to improve output.

- OpenAI release their new image model: 4o image generation. It can generate highly coherent text in images, and iterate upon images in-context.

- This led to a one-day Ghibli-themed spontaneous meme explosion.

- It is interesting to see how it handles generating a map with walking directions. There are mistakes, but the quality is remarkable. The map itself is mostly just memorization, but the roughly-correct walking directions and time estimation point towards a more generalized underlying understanding.

Video

- SkyReels is offering AI tools to cover the entire workflow (script, video, editing).

- Pika is testing a new feature that allows one to edit existing video (e.g. animating an object).

World Synthesis

- Wayve reports: GAIA-2: Pushing the Boundaries of Video Generative Models for Safer Assisted and Automated Driving (technical report).

Science

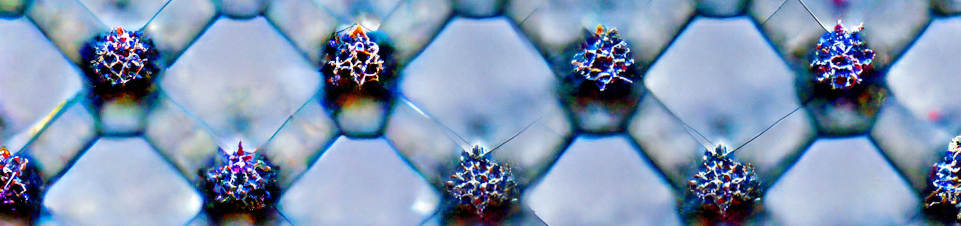

- Vant AI announces Neo-1, an atomistic foundation model useful for biochemistry and nanoscience.

- Google release TxGemma models (2B, 9B, 27B): Open models to improve therapeutics development.

Hardware

- Halliday: smart glasses intended for AI integration ($430).

Robots

- Unitree shows a video of smooth athletic movement.

- Figure reports on using reinforcement learning in simulation to greatly improve the walking of their humanoid robot, providing it with a better (faster, more efficient, more humanlike) gait.

- Google DeepMind paper: Gemini Robotics: Bringing AI into the Physical World. They present a vision-language-action model capable of directly controlling robots.