General

- Stanford Human-Centered Artificial Intelligence (HAI) report on the current state of AI: Artificial Intelligence Index Report 2025.

Research Insights

- Paper: Reasoning Models Know When They’re Right: Probing Hidden States for Self-Verification. Reasoning models generate multiple candidate answers, yet this research shows that a correctness signal, probed from the model's hidden states, is highly correlated with which answer is correct. This implies that the model could have arrived at, and output, this confident/correct answer more efficiently.

- Paper: Evaluating the Goal-Directedness of Large Language Models.

LLM

- Zyphra releases an open-source reasoning model: ZR1-1.5B (weights, try using).

- Anthropic adds a Research capability and Google Workspace integration to Claude.

- OpenAI announces GPT-4.1 models in the API. Optimized for developers (instruction following, coding, diff generation, etc.), with 1M context length; three models (4.1, 4.1-mini, 4.1-nano) provide control of performance vs. cost. Models can handle text, image, and video.

- They also have a prompting guide for 4.1.

- OpenAI has released a new long-context eval: MRCR.

- OpenAI intends to deprecate GPT-4.5 in the next few months.

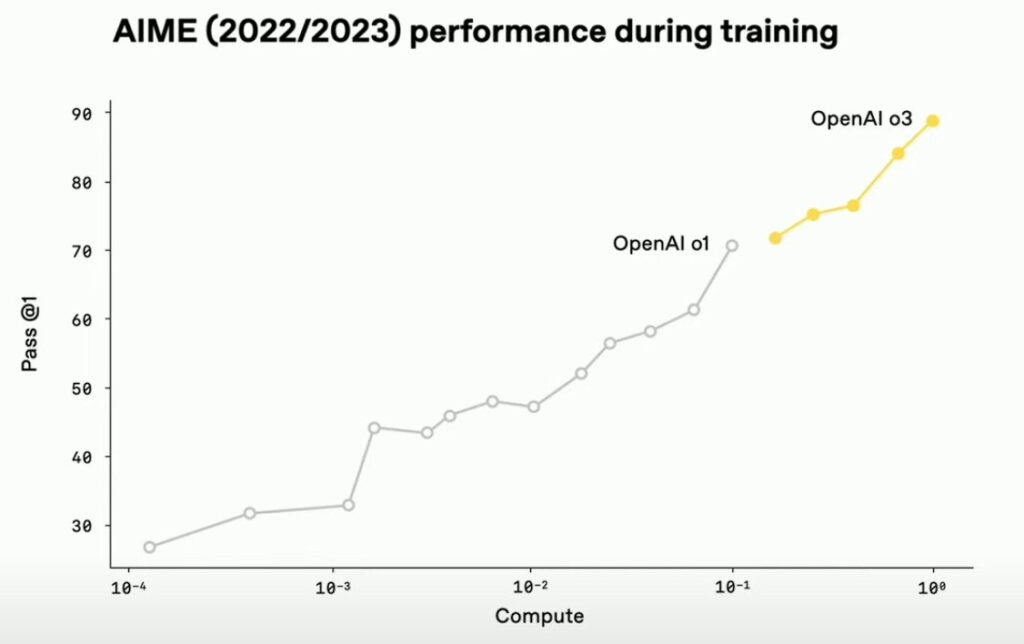

- OpenAI announces o3 and o4-mini reasoning models.

- These models are explicitly trained to use tools as part of their reasoning process.

- They can reason over images in new ways.

- Improved scores on math and code benchmarks (91-98% on AIME, ~75% on scientific figure reasoning, etc.).

- o3 is strictly better than o1 (higher performance with lower inference cost); o1 will be deprecated.

- OpenAI will be releasing coding agent applications, starting with Codex CLI, which allows one to deploy coding agents easily.

- METR has provided evaluations of capabilities.

- As part of the release, they also provided data showing how scaling RL is yielding predictable improvements.

Safety

Video

- Pusa (code, paper) is an open-source video model trained on limited resources.

- Alibaba FantasyTalking (paper) can lipsync a character, including body motion (examples).

- Tencent Hunyuan announces Sonic: Shifting Focus to Global Audio Perception in Portrait Animation. Image animation/lipsync that generates more emotive performances (examples).

- ByteDance Seaweed-7B: Cost-Effective Training of Video Generation Foundation Model (examples). Can generate audio alongside video, or condition video generation on provided audio.

- Kling 2.0 released (launch video). Improved video output, ability to conditionally edit videos, restyle, etc.

Audio

- Krisp provides real-time accent modification/neutralization capabilities.

Science