Research Insights

- Beyond Euclid: An Illustrated Guide to Modern Machine Learning with Geometric, Topological, and Algebraic Structures.

- Reliable Reasoning Beyond Natural Language. Another neurosymbolic approach. The LLM converts problems into code statements (Prolog) and uses them for rigorous reasoning. This is similar to how humans attack complex problems (by mentally or externally converting them into symbols/code, and using that abstraction for reasoning).

- Truth is Universal: Robust Detection of Lies in LLMs. They identify a 2D subspace that captures the semantics of truth/falsehood (universal across the LLMs they tested).
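
The Prolog item's translate-then-reason pattern can be illustrated with a toy logic engine. This is a minimal sketch, not the paper's pipeline. It assumes an LLM has already emitted facts and Horn-clause rules. These are written here as Python tuples rather than Prolog, with variables marked by a leading `?`. The sketch also uses forward chaining rather than Prolog's backward (SLD) resolution. Every derived fact follows mechanically from explicit premises, which is where the rigor comes from.

```python
# Facts are ground tuples, e.g. ("parent", "alice", "bob").
# Rules are (head, body) pairs; terms starting with "?" are variables.
# The predicates and names below are hypothetical stand-ins for LLM output.

def is_var(term):
    return isinstance(term, str) and term.startswith("?")

def unify(pattern, fact, bindings):
    """Match one body atom against a ground fact, extending the bindings (or None)."""
    if len(pattern) != len(fact):
        return None
    new = dict(bindings)
    for p, f in zip(pattern, fact):
        if is_var(p):
            if p in new and new[p] != f:
                return None
            new[p] = f
        elif p != f:
            return None
    return new

def match_body(body, facts, bindings):
    """Yield every binding that satisfies all atoms in a rule body."""
    if not body:
        yield bindings
        return
    first, rest = body[0], body[1:]
    for fact in facts:
        b = unify(first, fact, bindings)
        if b is not None:
            yield from match_body(rest, facts, b)

def substitute(atom, bindings):
    return tuple(bindings.get(t, t) if is_var(t) else t for t in atom)

def forward_chain(facts, rules):
    """Apply rules repeatedly until no new facts appear (a fixed point)."""
    known = set(facts)
    while True:
        new_facts = set()
        for head, body in rules:
            for b in match_body(body, list(known), {}):
                derived = substitute(head, b)
                if derived not in known:
                    new_facts.add(derived)
        if not new_facts:
            return known
        known |= new_facts

facts = {("parent", "alice", "bob"), ("parent", "bob", "carol")}
rules = [
    (("ancestor", "?x", "?y"), [("parent", "?x", "?y")]),
    (("ancestor", "?x", "?z"), [("parent", "?x", "?y"), ("ancestor", "?y", "?z")]),
]
derived = forward_chain(facts, rules)
print(("ancestor", "alice", "carol") in derived)  # True
```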

Capabilities

- Google DeepMind demonstrates vastly improved AI reasoning on math problems. AlphaProof and an improved AlphaGeometry 2 combine a language model with the AlphaZero reinforcement learning algorithm (leveraging the Lean formal language). Together, the systems achieve silver-medal performance on math Olympiad problems. Combining LLM heuristics (as system 1 intuitions) with more rigorous iteration (as system 2 reasoning) seems like a viable path towards improved intelligence.

- It seems increasingly likely that AI will achieve gold-medal performance soon enough.

- OpenAI presented some similar work in 2022, and UC Berkeley just published a related result using Prolog. It is also known that tree search (e.g. MCTS) improves LLM math abilities (1, 2, 3, 4, 5). Overall this body of work points towards a viable way to improve LLM math performance. The hope is that this translates to improved general reasoning.

- OpenAI announced SearchGPT, a web-searching prototype (not yet available to the public). Looks like it will be useful.
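
The AlphaProof and tree-search items share one pattern: a fallible heuristic proposes and an exact checker verifies. A toy version shows the idea. This sketch uses plain best-first search, not MCTS or AlphaZero. The integer "proof states", the step set, the distance heuristic (standing in for an LLM's intuition) and the equality check (standing in for a Lean verifier) are all hypothetical.

```python
import heapq

# Hypothetical "proof steps": each maps a state (an integer) to a new state.
STEPS = {
    "+3": lambda x: x + 3,
    "*2": lambda x: x * 2,
    "-1": lambda x: x - 1,
}

def heuristic(value, target):
    """Cheap, fallible guess at distance to the goal (stand-in for an LLM prior)."""
    return abs(target - value)

def verify(value, target):
    """Exact, trustworthy check (stand-in for a formal verifier such as Lean)."""
    return value == target

def best_first_search(start, target, max_depth=10, max_expansions=10_000):
    # Frontier entries: (heuristic score, depth, state, path of step names).
    frontier = [(heuristic(start, target), 0, start, [])]
    seen = {start}
    expansions = 0
    while frontier and expansions < max_expansions:
        _, depth, value, path = heapq.heappop(frontier)
        expansions += 1
        if verify(value, target):
            return path
        if depth == max_depth:
            continue
        for name, step in STEPS.items():
            nxt = step(value)
            if nxt not in seen:  # states are unique, so tuples never compare paths
                seen.add(nxt)
                heapq.heappush(frontier, (heuristic(nxt, target), depth + 1, nxt, path + [name]))
    return None

path = best_first_search(start=2, target=23)
print(path)  # ['+3', '*2', '*2', '+3']
```

The heuristic only decides what to try next. Correctness comes entirely from `verify`, so a poor heuristic costs search time but cannot yield a wrong answer.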

AI Agents

- Google is open-sourcing Project Oscar, a framework for AI agents.

LLM

- Llama 3.1 405B is now released. It is 750GB on disk, requires 8-16 GPUs to run inference, and has a 128k context length. Benchmarks show it is competitive with the state of the art (OpenAI GPT-4o and Anthropic Claude 3.5 Sonnet).

- Zuckerberg published a companion essay: Open Source AI Is the Path Forward.

- Llama 3.1 also includes smaller models distilled from the larger one.

- Of course we are already seeing real-time voice chatbots that take advantage of the small/fast models: RTVI (demo, code) runs Llama on Groq for responsive voice chatting.

- Mistral Large 2 released (download). 123B parameters, 128k context length. Appears roughly competitive with Llama 3.1, GPT-4o, etc.
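
The disk size and GPU counts quoted above follow from simple arithmetic. This back-of-the-envelope sketch rests on three assumptions: 2 bytes per parameter for BF16 weights, 1 byte for FP8, and 80 GB of memory per GPU (e.g. an H100). It counts weights only and ignores the KV cache and activations, which need extra headroom.

```python
import math

GB = 1e9      # decimal gigabyte
GIB = 2**30   # binary gibibyte

def weight_bytes(n_params, bytes_per_param):
    """Memory needed for the raw weights at a given numeric precision."""
    return n_params * bytes_per_param

def min_gpus(n_params, bytes_per_param, gpu_mem_gb=80):
    """Minimum GPU count just to hold the weights (no KV cache or activations)."""
    return math.ceil(weight_bytes(n_params, bytes_per_param) / (gpu_mem_gb * GB))

for name, params in [("Llama 3.1 405B", 405e9), ("Mistral Large 2", 123e9)]:
    for fmt, bpp in [("BF16", 2), ("FP8", 1)]:
        size = weight_bytes(params, bpp)
        print(f"{name} {fmt}: {size / GB:.0f} GB ({size / GIB:.0f} GiB), "
              f">= {min_gpus(params, bpp)} x 80 GB GPUs")
```

At BF16, the 405B weights come to about 754 GiB, in line with the quoted ~750GB. Holding them needs at least 11 such GPUs, which in practice means two 8-GPU nodes (16 GPUs). FP8 weights fit in a single 8-GPU node, so the result matches the 8-16 range. Mistral Large 2 at BF16 (about 246 GB) fits on four.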

Multi-modal Models

- Apple publishes: SlowFast-LLaVA: A Strong Training-Free Baseline for Video Large Language Models. It uses two streams: a low-frame-rate branch with high spatial (pixel) resolution, and a high-frame-rate but spatially downsampled branch. Combining them balances spatial and temporal information.
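
The two-stream design can be sketched as token construction from per-frame encoder features. This is a minimal illustration under several assumptions. It uses NumPy and a (T, H, W, C) feature array. The slow pathway samples frames uniformly, and the fast pathway uses non-overlapping average pooling. The frame count and pooling sizes are hypothetical, not the paper's settings.

```python
import numpy as np

def avg_pool(x, pool):
    """Non-overlapping spatial average pooling over (H, W) of a (T, H, W, C) array."""
    T, H, W, C = x.shape
    ph, pw = pool
    if H % ph or W % pw:
        raise ValueError("pool size must divide H and W evenly")
    return x.reshape(T, H // ph, ph, W // pw, pw, C).mean(axis=(2, 4))

def slowfast_tokens(features, slow_frames=8, slow_pool=(1, 1), fast_pool=(4, 4)):
    """
    Slow pathway: few frames, little pooling (high spatial resolution).
    Fast pathway: all frames, aggressive pooling (high temporal resolution).
    Returns an (N_tokens, C) array that would be fed to the LLM.
    """
    T, H, W, C = features.shape
    # Uniformly sample frame indices for the slow pathway.
    idx = np.linspace(0, T - 1, num=min(slow_frames, T)).round().astype(int)
    slow = avg_pool(features[idx], slow_pool)
    fast = avg_pool(features, fast_pool)
    return np.concatenate([slow.reshape(-1, C), fast.reshape(-1, C)], axis=0)

# Hypothetical encoder output: 32 frames, 24x24 patches, 64-dim features.
features = np.random.default_rng(0).random((32, 24, 24, 64))
tokens = slowfast_tokens(features)
print(tokens.shape)  # (5760, 64)
```

With these settings the slow pathway contributes 8 × 576 tokens and the fast pathway 32 × 36, for 5,760 in total. Keeping every frame at full resolution would instead cost 32 × 576 = 18,432 tokens.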

Audio

- Suno AI has added instrumental and vocal stems, allowing users to separate the vocals and instrumentals from songs.

- Udio released v1.5 with improved audio quality, and also added the ability to download stems.

3D

- Text-to-blueprint: Generating 3D House Wireframes with Semantics (c.f. text-to-CAD).

World Synthesis

- Semantic Gaussians: Open-Vocabulary Scene Understanding with 3D Gaussian Splatting, can segment objects in a dynamic 3D scene (code now available).

- Shape of Motion: 4D Reconstruction from a Single Video.

- DreamCatalyst can edit NeRF scenes (e.g. text prompts to convert people/characters).

- SV4D: Dynamic 3D Content Generation with Multi-Frame and Multi-View Consistency. Synthesizes new views of moving 3D objects, with good consistency.

Policy

- Meta won’t release its multimodal Llama AI model in the EU.

- Sam Altman opinion piece in Washington Post. Altman expresses urgency, and suggests the need for a US-led global coalition to develop AI safely. (Similar to Aschenbrenner’s Situational Awareness, c.f. summary.)

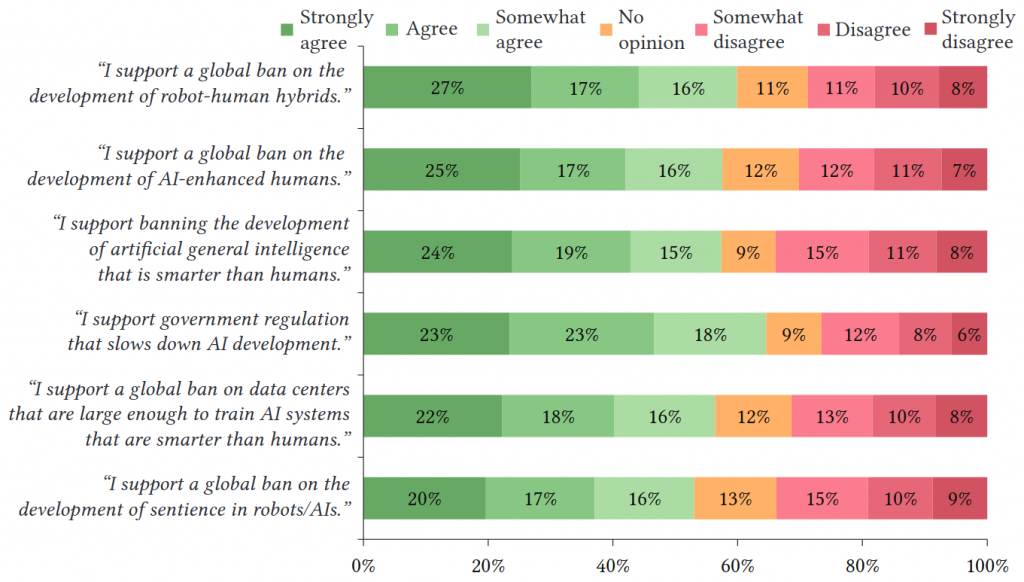

- Survey: What Do People Think about Sentient AI? Broadly negative views towards AI, with opinions against the development of sentient AI. However, there is also much confusion about what we mean by sentience.

Hardware

- xAI has just turned on their cluster: 100,000 Nvidia H100 GPUs, which is roughly 100 exaflops (FP16) of compute (hardware cost ~$3B). They claim this is the most powerful single cluster for AI applications. (Supposedly, OpenAI’s next cluster will have 100k GB200, which would be ~250 exaflops and cost ~$6.5B.)
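
Aggregate peak compute is simply GPU count times per-GPU throughput. This back-of-the-envelope sketch rests on two assumptions. The first is ~989 TFLOPS of dense FP16/BF16 tensor throughput per H100 SXM (Nvidia's spec-sheet peak, without sparsity). The second is ~2.5 PFLOPS dense per Blackwell GPU in a GB200 system, treating each of the 100k as one GPU. Sustained real-world throughput is well below these peaks.

```python
PETA = 1e15
EXA = 1e18

def cluster_flops(n_gpus, flops_per_gpu):
    """Peak aggregate throughput; ignores utilization, interconnect, and sparsity."""
    return n_gpus * flops_per_gpu

h100 = cluster_flops(100_000, 989e12)   # assumed dense FP16 peak per H100 SXM
gb200 = cluster_flops(100_000, 2.5e15)  # assumed dense FP16 peak per Blackwell GPU

print(f"100k H100:  {h100 / EXA:.0f} exaFLOPS ({h100 / PETA:,.0f} petaFLOPS)")
print(f"100k GB200: {gb200 / EXA:.0f} exaFLOPS")
print(f"H100 hardware cost per GPU at ~$3B: ${3e9 / 100_000:,.0f}")
```

At these assumed peaks, both clusters are measured in exaFLOPS (10^18 FLOP/s) rather than petaFLOPS (10^15). The ~$3B figure works out to about $30,000 per H100.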

Robots

- The effective cost of Agility’s robots-as-a-service (c.f.) is estimated at $30/hour for humanoid robot work.

- Automated construction of cognitive maps with visual predictive coding. An agent in a virtual environment was able to build spatial maps using predictive coding (i.e. to succeed in a next-image predictive task, it must build a reliable map of the environment). Although the demo operates in a spatial environment, the same idea could be applied to more abstract spaces.

- R+X: Retrieval and Execution from Everyday Human Videos. The idea is for humans to record their POV while performing everyday tasks. This forms the dataset for robot actions. No video labeling is required.

- For a requested task, they retrieve instances of that task from video (using Gemini). This is used to condition an in-context policy to execute the task.

- This fits into the growing trend of training on human actions, thereby justifying the humanoid robot form factor (c.f.).
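
R+X follows a retrieve-then-condition loop, which can be sketched as follows. The sketch swaps in plain cosine-similarity retrieval over hypothetical precomputed clip embeddings, in place of the Gemini-based retrieval the authors use. It stops at assembling the in-context examples and includes no actual policy. The clip names, vectors, trajectories and context format are all made up for illustration.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_vec, clip_index, k=2):
    """Return the k clip IDs whose embeddings are most similar to the task query."""
    ranked = sorted(clip_index.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [clip_id for clip_id, _ in ranked[:k]]

def build_context(task, retrieved, demos):
    """Pack retrieved demonstrations into a hypothetical in-context input for a policy."""
    return {"task": task, "examples": [demos[c] for c in retrieved]}

# Hypothetical 3-d embeddings of unlabeled first-person clips.
clip_index = {
    "clip_wipe_table": [0.9, 0.1, 0.0],
    "clip_open_drawer": [0.1, 0.9, 0.1],
    "clip_wipe_counter": [0.8, 0.2, 0.1],
}
# Hypothetical trajectories extracted from each clip (e.g. hand motion).
demos = {c: f"trajectory<{c}>" for c in clip_index}

query = [1.0, 0.1, 0.0]  # hypothetical embedding of the request "wipe the table"
context = build_context("wipe the table", retrieve(query, clip_index), demos)
print(context["examples"])  # ['trajectory<clip_wipe_table>', 'trajectory<clip_wipe_counter>']
```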